- Lab Session 2: Advanced Threat Modelling with STRIDE and Attack Trees.

Users should establish the technical scope, system architecture, and system components before performing threat modelling for a system.

- Developers and end-users in various industries

- Anyone

- LLMs process diverse data including user inputs and large text corpora, with data flowing from user interactions to outputs in applications and databases

---

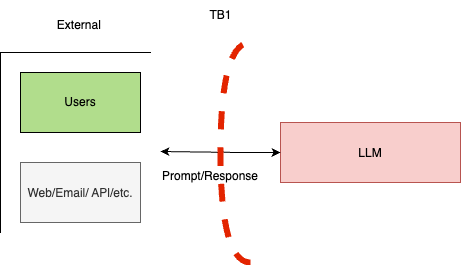

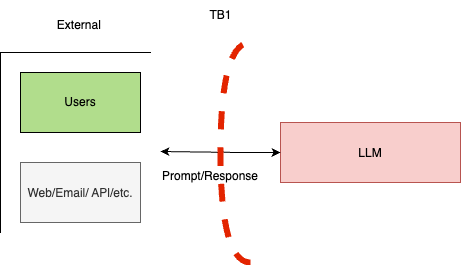

### Step 3 – Threat Identification: **STRIDE - TB1**

- **For the External points**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | - | **V1**: Modify System prompt (prompt injection) |
| **2-Tampering** | - | **V2**: Modify LLM parameters (temperature, length, model, etc.) |
| **3-Repudiation** | Proper authentication and authorisation (assumed) | - |
| **4-Information Disclosure** | - | **V3**: Input sensitive information to a third-party site (user behavior) |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | Proper authentication and authorisation (assumed) | - |

---

### Step 3 – Threat Identification: **STRIDE - TB1**

- **For LLMs**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | - | - |
| **2-Tampering** | - | - |
| **3-Repudiation** | - | - |
| **4-Information Disclosure** | - | **V4**: LLMs are unable to filter sensitive information (open research) |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | - | - |

---

### Step 3 – Threat Identification: **STRIDE - TB1**

#### List of vulnerabilities

| V_ID | Description | Example |
|---|---|---|
| V1 | Modify System prompt (prompt injection) | Users can modify the system-level prompt restrictions to "jailbreak" the LLM and override the controls already in place. |
| V2 | Modify LLM parameters (temperature, length, model, etc.) | Users can modify the API parameters passed to the LLM, such as the temperature, the number of tokens returned, and the model being used. |
| V3 | Input sensitive information to a third-party site (user behavior) | Users may knowingly or unknowingly submit private information, such as HIPAA-protected health details or trade secrets, into LLMs. |
| V4 | LLMs are unable to filter sensitive information (open research area) | LLMs are not able to hide sensitive information. Anything presented to an LLM can be retrieved by a user. This is an open area of research. |
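
One way to narrow V2 is to validate caller-supplied parameters on the server before they reach the model. The following is a minimal sketch under stated assumptions: the model names, the limits, the defaults, and the function `sanitise_params` are all hypothetical and do not correspond to any particular LLM API.

```python
import math

# Hypothetical allowlist and limits; real values depend on the deployment.
ALLOWED_MODELS = {"model-small", "model-large"}
DEFAULT_MODEL = "model-small"
MAX_TOKENS_CAP = 512


def _clamp(value, low, high, default):
    """Coerce value to a finite number within [low, high], else use default."""
    try:
        number = float(value)
    except (TypeError, ValueError):
        return default
    if not math.isfinite(number):
        return default
    return min(max(number, low), high)


def sanitise_params(user_params: dict) -> dict:
    """Build the parameter set from scratch so unknown keys are dropped."""
    model = user_params.get("model")
    if not isinstance(model, str) or model not in ALLOWED_MODELS:
        model = DEFAULT_MODEL
    return {
        "model": model,
        "temperature": _clamp(user_params.get("temperature"), 0.0, 1.0, 0.7),
        "max_tokens": int(_clamp(user_params.get("max_tokens"), 1, MAX_TOKENS_CAP, 256)),
    }
```

The sketch builds a fresh dictionary rather than editing the caller's, so any key that is not explicitly handled (for example an attempt to pass a replacement system prompt) never reaches the model call.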

---

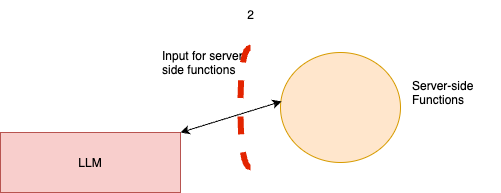

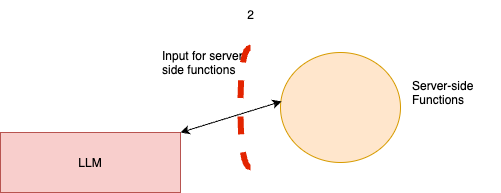

### Step 3 – Threat Identification: **STRIDE - TB2**

- **For LLMs**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | - | V5: Output controlled by prompt input (unfiltered) |
| **2-Tampering** | - | V5: Output controlled by prompt input (unfiltered) |
| **3-Repudiation** | - | - |
| **4-Information Disclosure** | - | - |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | - | - |

---

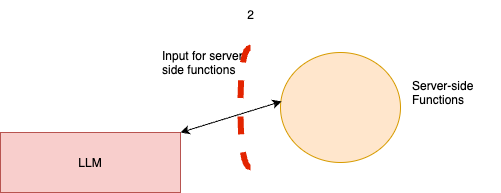

### Step 3 – Threat Identification: **STRIDE - TB2**

- **For Server-Side Functions**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | Server-side functions maintain separate access to LLM from users | - |
| **2-Tampering** | - | V6: Server-side output can be fed directly back into LLM (requires filter) |
| **3-Repudiation** | - | - |
| **4-Information Disclosure** | - | V6: Server-side output can be fed directly back into LLM (requires filter) |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | - | - |

---

### Step 3 – Threat Identification: **STRIDE - TB2**

#### List of vulnerabilities

| V_ID | Description | Example |
|---|---|---|
| V5 | Output controlled by prompt input (unfiltered) | LLM output can be controlled by users and external entities. Unfiltered acceptance of LLM output could lead to unintended code execution. |
| V6 | Server-side output can be fed directly back into LLM (requires filter) | Unrestricted input to server-side functions can result in sensitive information disclosure or server-side request forgery (SSRF). Server-side controls would mitigate this impact. |
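
As an illustration of the server-side controls mentioned for V6, a function that fetches URLs on behalf of the LLM can refuse any destination outside an explicit allowlist. This is a minimal sketch, assuming a hypothetical allowlist `ALLOWED_HOSTS` and helper `is_allowed_url`; it uses only the Python standard library.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of hosts the server-side function may contact.
ALLOWED_HOSTS = {"api.example.com"}


def is_allowed_url(url: str) -> bool:
    """Return True only for HTTPS URLs whose host is explicitly allowlisted."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed URLs (for example a broken IPv6 literal) are rejected.
        return False
    if parts.scheme != "https":
        return False
    # hostname excludes any userinfo and port, and is already lower-cased.
    return parts.hostname in ALLOWED_HOSTS
```

An allowlist is used instead of a blocklist of internal addresses because anything not listed, including literal IP addresses such as cloud metadata endpoints, is rejected by default. The check covers only the URL as given; redirects and DNS changes after the check would need separate handling.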

---

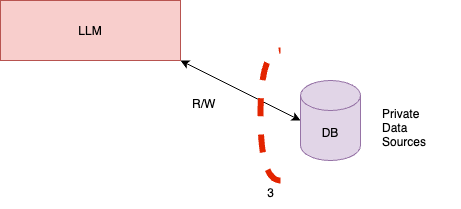

### Step 3 – Threat Identification: **STRIDE - TB3**

- **For LLMs**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | - | V5: Output controlled by prompt input (unfiltered) |
| **2-Tampering** | - | V5: Output controlled by prompt input (unfiltered) |
| **3-Repudiation** | - | - |
| **4-Information Disclosure** | - | - |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | - | - |

---

### Step 3 – Threat Identification: **STRIDE - TB3**

- **For Private Data Sources**

| **Category** | **Strengths** | **Weaknesses** |
|---|---|---|
| **1-Spoofing** | - | - |
| **2-Tampering** | - | - |
| **3-Repudiation** | - | - |
| **4-Information Disclosure** | - | V7: Access to sensitive information |
| **5-Denial of Service** | - | - |
| **6-Elevation of Privilege** | - | - |

---

### Step 3 – Threat Identification: **STRIDE - TB3**

#### List of vulnerabilities

| **V_ID** | **Description** | **Example** |
|---|---|---|
| **V5** | Output controlled by prompt input (unfiltered) | LLM output can be controlled by users and external entities. Unfiltered acceptance of LLM output could lead to unintended code execution. |
| **V7** | Access to sensitive information | LLMs have no concept of authorisation or confidentiality. Unrestricted access to private data stores would allow users to retrieve sensitive information. |
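
Because the LLM cannot enforce authorisation itself (V7), the check has to sit in front of the data store, keyed on the authenticated end user rather than on anything the model says. The sketch below assumes a hypothetical in-memory store `RECORDS` and accessor `fetch_for_llm`; a real system would apply the same check against its own database and identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    owner: str
    body: str


# Hypothetical private data store, keyed by record ID.
RECORDS = {
    "r1": Record(owner="alice", body="Alice's lab results"),
    "r2": Record(owner="bob", body="Bob's payroll data"),
}


def fetch_for_llm(user_id: str, record_id: str) -> str:
    """Return a record body for the LLM context only if user_id owns it.

    user_id must come from the authenticated session, never from the prompt.
    """
    record = RECORDS.get(record_id)
    # Use one error for "missing" and "forbidden" so IDs cannot be probed.
    if record is None or record.owner != user_id:
        raise PermissionError("record not available to this user")
    return record.body
```

With this arrangement a prompt that asks for another user's record fails at the data source, regardless of how the request was phrased to the model.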

---


| **REC_ID** | **Recommendations for Mitigation** |
|---|---|
| **REC1** | Avoid training LLMs on non-public data. Treat all LLM output as untrusted and enforce data/action restrictions based on LLM requests. |
| **REC2** | Limit exposed API surfaces to external prompts. Treat all external inputs as untrusted, applying filtering where needed. |
| **REC3** | Educate users on safe behavior during signup and provide consistent notifications when connecting to the LLM. |
| **REC4** | Do not train LLMs on sensitive data. Enforce authorisation controls at the data source level, not within the LLM. |
| **REC5** | Treat LLM output as untrusted; apply restrictions before using it in other functions to mitigate malicious prompt impact. |
| **REC6** | Filter server-side function outputs and sanitise sensitive information before retraining or returning output to users. |
| **REC7** | Treat LLM access like typical user access. Enforce authentication/authorisation controls prior to data access, as LLMs cannot protect sensitive information. |
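
To make REC5 concrete, LLM output that is meant to trigger a downstream function can be parsed and checked against an allowlist before anything is executed. This is a minimal sketch, assuming the LLM is asked to reply with a JSON object of the form `{"action": ..., "args": {...}}`; the action names, the allowlist `ALLOWED_ACTIONS`, and the function `parse_action` are hypothetical.

```python
import json

# Hypothetical allowlist: action name -> argument names it may receive.
ALLOWED_ACTIONS = {
    "get_weather": {"city"},
    "search_docs": {"query"},
}


def parse_action(llm_output: str) -> dict:
    """Validate an LLM-proposed action; raise ValueError if it is not permitted."""
    try:
        request = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError("LLM output is not valid JSON") from exc
    if not isinstance(request, dict):
        raise ValueError("expected a JSON object")

    name = request.get("action")
    args = request.get("args", {})
    if not isinstance(name, str) or name not in ALLOWED_ACTIONS:
        raise ValueError(f"action not permitted: {name!r}")
    if not isinstance(args, dict) or set(args) - ALLOWED_ACTIONS[name]:
        raise ValueError("unexpected arguments")
    if not all(isinstance(value, str) for value in args.values()):
        raise ValueError("arguments must be strings")

    # Return a fresh dict so only validated fields are passed on.
    return {"action": name, "args": args}
```

The output is never passed to `eval`, a shell, or a dynamic dispatcher; only actions named in the allowlist, with the expected argument names, get through.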

---